import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

az.style.use("arviz-darkgrid")

SEED = 1211Regression splines (Cherry blossom example)

This example shows how to specify and fit a spline regression in Bambi. This example is based on this example from the PyMC docs.

Load Cherry Blossom data

Richard McElreath popularized the Cherry Blossom dataset in the second edition of his excellent book Statistical Rethinking. This data represents the day in the year when the first bloom is observed for Japanese cherry blossoms between years 801 and 2015. In his book, Richard McElreath uses this dataset to introduce Basis Splines, or B-Splines in short.

Here we use Bambi to fit a linear model using B-Splines with the Cherry Blossom data. This dataset can be loaded with Bambi as follows:

data = bmb.load_data("cherry_blossoms")

data| year | doy | temp | temp_upper | temp_lower | |

|---|---|---|---|---|---|

| 0 | 801 | NaN | NaN | NaN | NaN |

| 1 | 802 | NaN | NaN | NaN | NaN |

| 2 | 803 | NaN | NaN | NaN | NaN |

| 3 | 804 | NaN | NaN | NaN | NaN |

| 4 | 805 | NaN | NaN | NaN | NaN |

| ... | ... | ... | ... | ... | ... |

| 1210 | 2011 | 99.0 | NaN | NaN | NaN |

| 1211 | 2012 | 101.0 | NaN | NaN | NaN |

| 1212 | 2013 | 93.0 | NaN | NaN | NaN |

| 1213 | 2014 | 94.0 | NaN | NaN | NaN |

| 1214 | 2015 | 93.0 | NaN | NaN | NaN |

1215 rows × 5 columns

The variable we are interested in modeling is "doy", which stands for Day of Year. Also notice this variable contains several missing values which are discarded next.

data = data.dropna(subset=["doy"]).reset_index(drop=True)

data.shape(827, 5)Explore the data

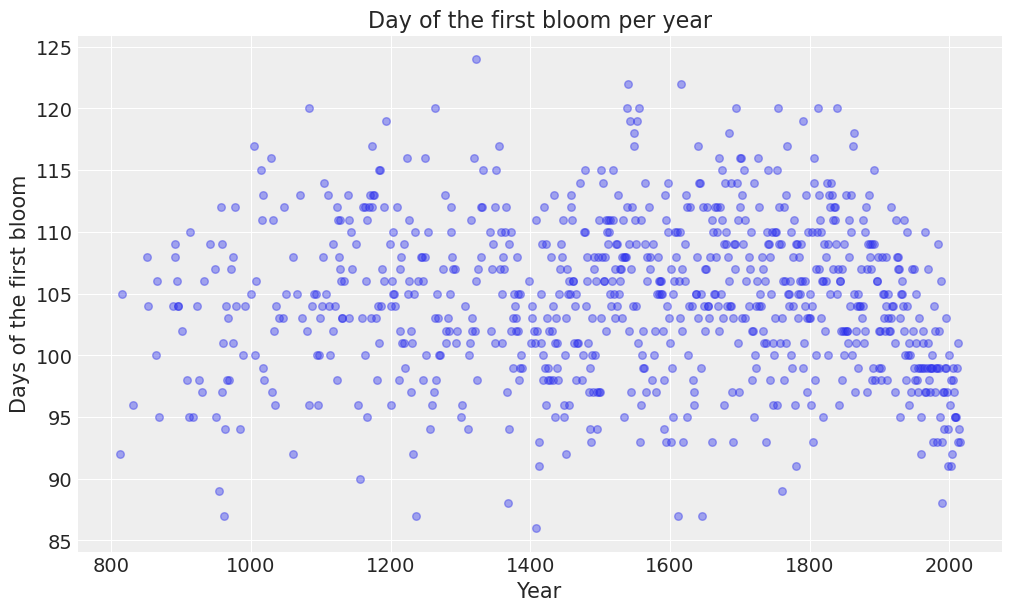

Let’s get started by creating a scatterplot to explore the values of "doy" for each year in the dataset.

# We create a function because this plot is going to be used again later

def plot_scatter(data, ax, color="C0"):

ax.scatter(data["year"], data["doy"], alpha=0.4, s=30, color=color)

ax.set_title("Day of the first bloom per year")

ax.set_xlabel("Year")

ax.set_ylabel("Days of the first bloom")

return axfig, ax = plt.subplots(figsize=(10, 6))

plot_scatter(data, ax=ax);

We can observe the day of the first bloom ranges between 85 and 125 approximately, which correspond to late March and early May respectively. On average, the first bloom occurs on the 105th day of the year, which is middle April.

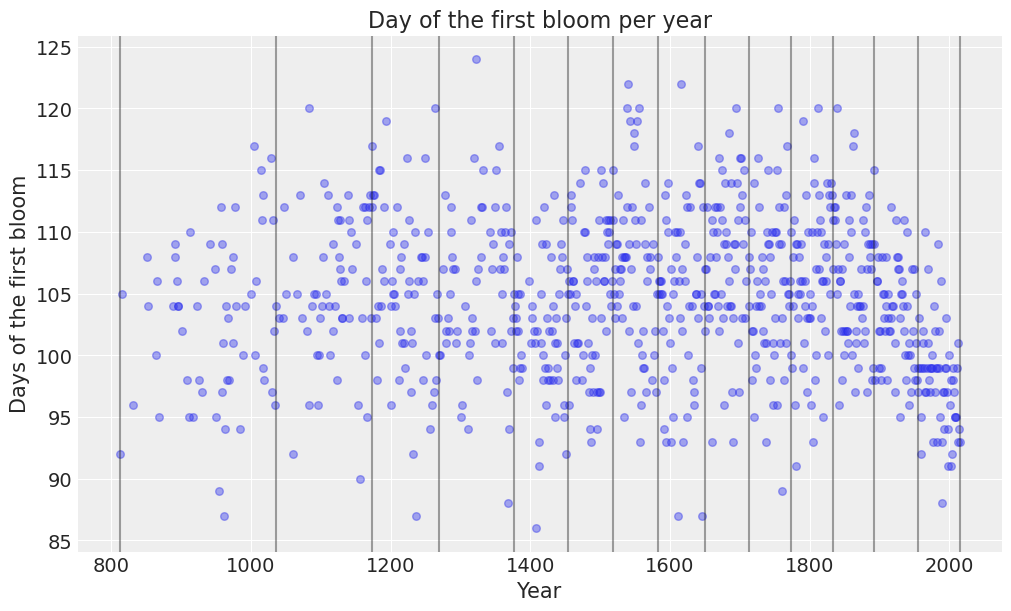

Determine knots

The spline will have 15 knots. These knots are the boundaries of the basis functions. These knots split the range of the "year" variable into 16 contiguous sections. The basis functions make up a piecewise continuous polynomial, and so they are enforced to meet at the knots. We use the default degree for each piecewise polynomial, which is 3. The result is known as a cubic spline.

Because of using quantiles and not having observations for all the years in the time window under study, the knots are distributed unevenly over the range of "year" in such a way that the same proportion of values fall between each section.

num_knots = 15

knots = np.quantile(data["year"], np.linspace(0, 1, num_knots))def plot_knots(knots, ax):

for knot in knots:

ax.axvline(knot, color="0.1", alpha=0.4)

return axfig, ax = plt.subplots(figsize=(10, 6))

plot_scatter(data, ax=ax)

plot_knots(knots, ax);

The previous chart makes it easy to see the knots, represented by the vertical lines, are spaced unevenly over the years.

The model

The B-spline model we are about to create is simply a linear regression model with synthetic predictor variables. These predictors are the basis functions that are derived from the original year predictor.

In math notation, we usa a \(\text{Normal}\) distribution for the conditional distribution of \(Y\) when \(X = x_i\), i.e. \(Y_i\), the distribution of the day of the first bloom in a given year.

\[ Y_i \sim \text{Normal}(\mu_i, \sigma) \]

So far, this looks like a regular linear regression model. The next line is where the spline comes into play:

\[ \mu_i = \alpha + \sum_{k=1}^K{w_kB_{k, i}} \]

The line above tells that for each observation \(i\), the mean is influenced by all the basis functions (going from \(k=1\) to \(k=K\)), plus an intercept \(\alpha\). The \(w_k\) values in the summation are the regression coefficients of each of the basis functions, and the \(B_k\) are the values of the basis functions.

Finally, we will be using the following priors

\[ \begin{aligned} \alpha & \sim \text{Normal}(100, 10) \\ w_j & \sim \text{Normal}(0, 10)\\ \sigma & \sim \text{Exponential(1)} \end{aligned} \]

where \(j\) indexes each of the contiguous sections given by the knots

# We only pass the internal knots to the `bs()` function.

iknots = knots[1:-1]

# Define dictionary of priors

priors = {

"Intercept": bmb.Prior("Normal", mu=100, sigma=10),

"common": bmb.Prior("Normal", mu=0, sigma=10),

"sigma": bmb.Prior("Exponential", lam=1)

}

# Define model

data["year"] = data["year"].astype(float)

# The intercept=True means the basis also spans the intercept, as originally done in the book example.

model = bmb.Model("doy ~ bs(year, knots=iknots, intercept=True)", data, priors=priors)

model Formula: doy ~ bs(year, knots=iknots, intercept=True)

Family: gaussian

Link: mu = identity

Observations: 827

Priors:

target = mu

Common-level effects

Intercept ~ Normal(mu: 100.0, sigma: 10.0)

bs(year, knots=iknots, intercept=True) ~ Normal(mu: 0.0, sigma: 10.0)

Auxiliary parameters

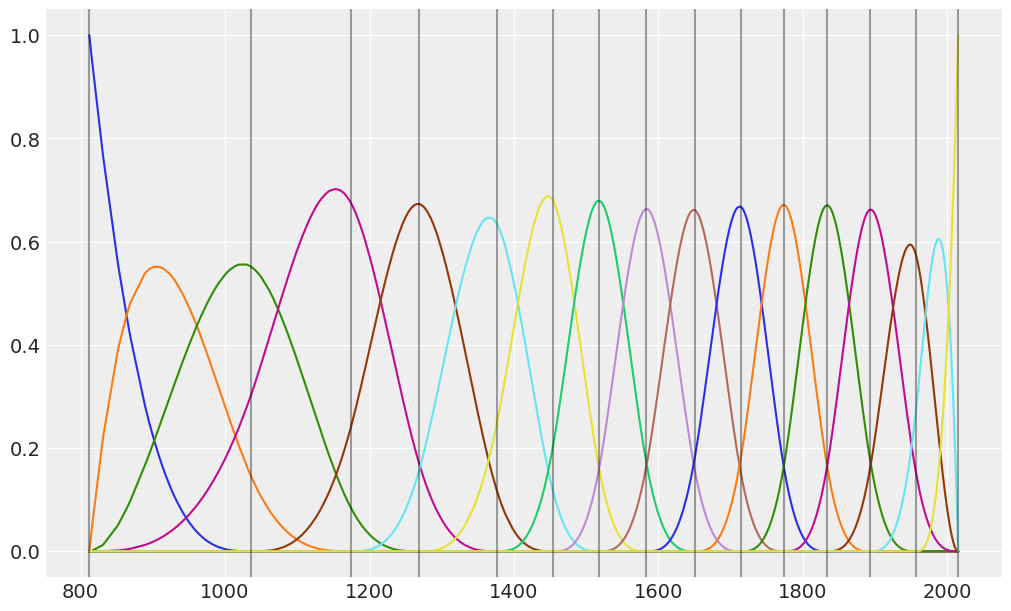

sigma ~ Exponential(lam: 1.0)Let’s create a function to plot each of the basis functions in the model.

def plot_spline_basis(basis, year, figsize=(10, 6)):

df = (

pd.DataFrame(basis)

.assign(year=year)

.melt("year", var_name="basis_idx", value_name="value")

)

_, ax = plt.subplots(figsize=figsize)

for idx in df.basis_idx.unique():

d = df[df.basis_idx == idx]

ax.plot(d["year"], d["value"])

return axBelow, we create a chart to visualize the b-spline basis. The overlap between the functions means that, at any given point in time, the regression function is influenced by more than one basis function. For example, if we look at the year 1200, we can see the regression line is going to be influenced mostly by the violet and brown functions, and to a lesser extent by the green and cyan ones. In summary, this is what enables us to capture local patterns in a smooth fashion.

B = model.components["mu"].design.common["bs(year, knots=iknots, intercept=True)"]

ax = plot_spline_basis(B, data["year"].values)

plot_knots(knots, ax);

Fit model

Now we fit the model. In Bambi, it is as easy as calling the .fit() method on the Model instance.

# The seed is to make results reproducible

idata = model.fit(random_seed=SEED, idata_kwargs={"log_likelihood": True})Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, bs(year, knots=iknots, intercept=True)]/home/tomas/Desktop/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/Desktop/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.Analisys of the results

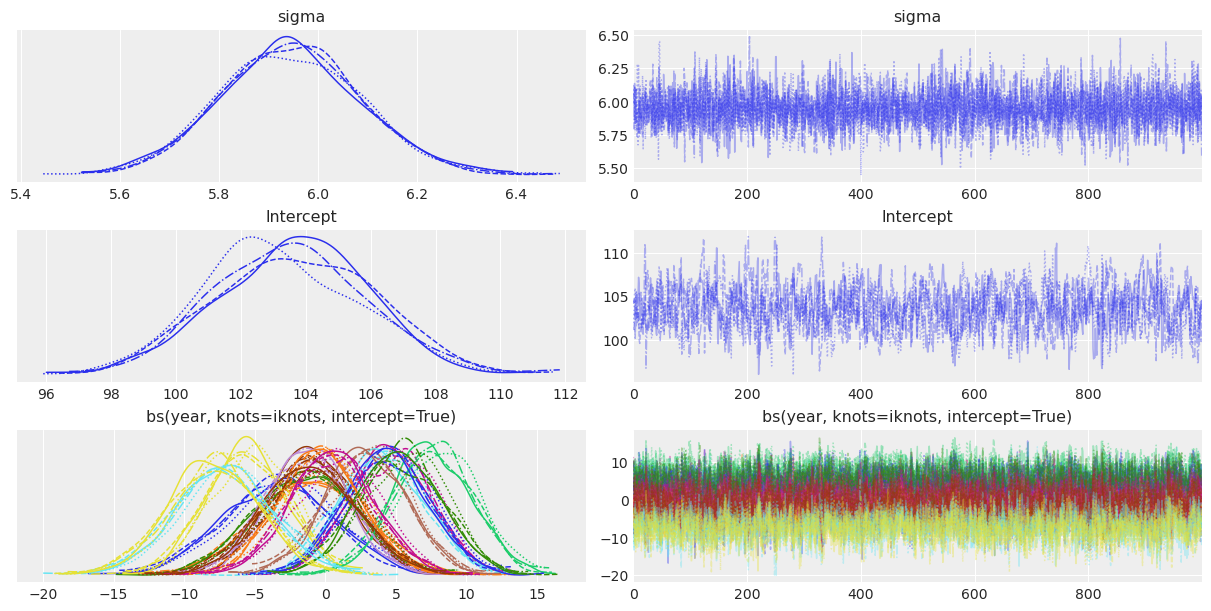

It is always good to use az.summary() to verify parameter estimates as well as effective sample sizes and R hat values. In this case, the main goal is not to interpret the coefficients of the basis spline, but analyze the ess and r_hat diagnostics. In first place, effective sample sizes don’t look impressively high. Most of them are between 300 and 700, which is low compared to the 2000 draws obtained. The only exception is the residual standard deviation sigma. Finally, the r_hat diagnostic is not always 1 for all the parameters, indicating there may be some issues with the mix of the chains.

az.summary(idata)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| sigma | 5.945 | 0.143 | 5.679 | 6.214 | 0.002 | 0.002 | 5191.0 | 2573.0 | 1.00 |

| Intercept | 103.517 | 2.415 | 99.013 | 107.954 | 0.093 | 0.051 | 678.0 | 1071.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[0] | -3.201 | 3.938 | -10.260 | 4.459 | 0.099 | 0.055 | 1567.0 | 2156.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[1] | -1.007 | 3.853 | -8.336 | 5.898 | 0.103 | 0.056 | 1388.0 | 2209.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[2] | -1.247 | 3.573 | -7.605 | 5.914 | 0.098 | 0.051 | 1348.0 | 2223.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[3] | 4.671 | 2.905 | -0.852 | 9.815 | 0.095 | 0.048 | 938.0 | 1913.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[4] | -1.001 | 2.952 | -6.262 | 4.728 | 0.099 | 0.049 | 898.0 | 1775.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[5] | 4.131 | 2.971 | -1.446 | 9.511 | 0.095 | 0.048 | 977.0 | 1683.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[6] | -5.484 | 2.869 | -10.626 | -0.004 | 0.096 | 0.047 | 905.0 | 1815.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[7] | 7.672 | 2.860 | 2.385 | 13.065 | 0.095 | 0.049 | 898.0 | 1501.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[8] | -1.171 | 2.920 | -6.539 | 4.302 | 0.097 | 0.049 | 912.0 | 1623.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[9] | 2.882 | 2.930 | -2.502 | 8.495 | 0.092 | 0.045 | 1009.0 | 1911.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[10] | 4.494 | 2.978 | -0.774 | 10.295 | 0.096 | 0.048 | 957.0 | 1819.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[11] | -0.340 | 2.903 | -5.678 | 5.113 | 0.094 | 0.047 | 954.0 | 1758.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[12] | 5.399 | 2.957 | -0.550 | 10.582 | 0.098 | 0.047 | 920.0 | 2016.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[13] | 0.554 | 3.050 | -4.988 | 6.484 | 0.095 | 0.051 | 1034.0 | 2152.0 | 1.01 |

| bs(year, knots=iknots, intercept=True)[14] | -0.960 | 3.378 | -7.379 | 5.364 | 0.107 | 0.053 | 997.0 | 1963.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[15] | -7.103 | 3.439 | -13.379 | -0.408 | 0.091 | 0.051 | 1430.0 | 2339.0 | 1.00 |

| bs(year, knots=iknots, intercept=True)[16] | -7.789 | 3.268 | -14.206 | -1.863 | 0.094 | 0.053 | 1200.0 | 1920.0 | 1.00 |

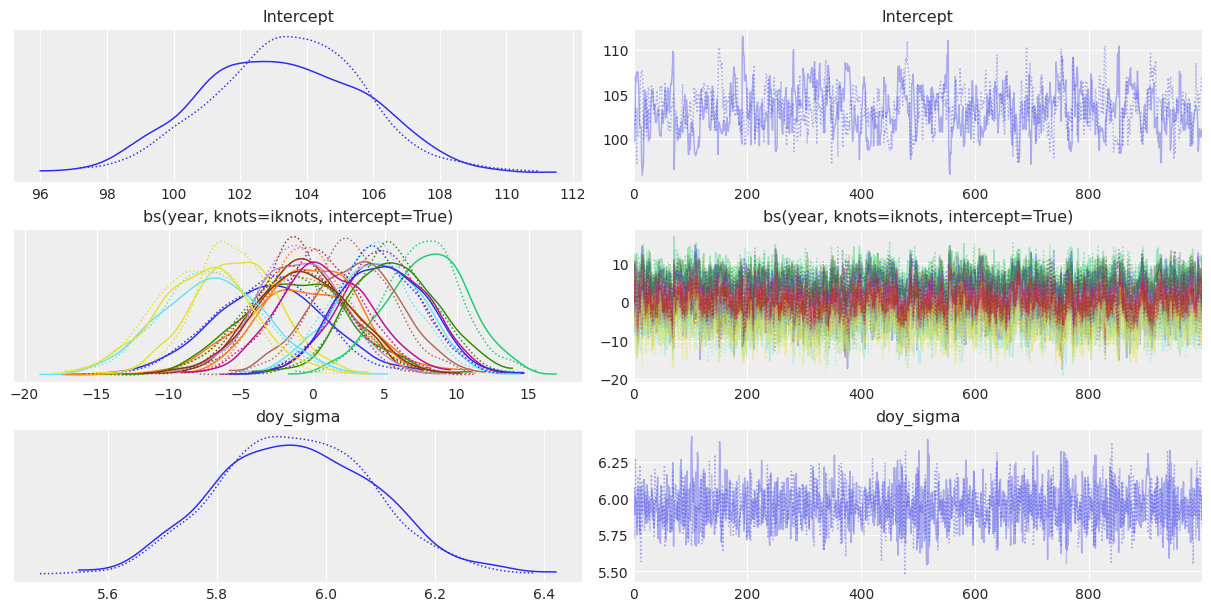

We can also use az.plot_trace() to visualize the marginal posteriors and the sampling paths. These traces show a stationary random pattern. If these paths were not random stationary, we would be concerned about the convergence of the chains.

az.plot_trace(idata);

Now we can visualize the fitted basis functions. In addition, we include a thicker black line that represents the dot product between \(B\) and \(w\). This is the contribution of the b-spline to the linear predictor in the model.

posterior_stacked = az.extract(idata)

wp = posterior_stacked["bs(year, knots=iknots, intercept=True)"].mean("sample").values

ax = plot_spline_basis(B * wp.T, data["year"].values)

ax.plot(data.year.values, np.dot(B, wp.T), color="black", lw=3)

plot_knots(knots, ax);

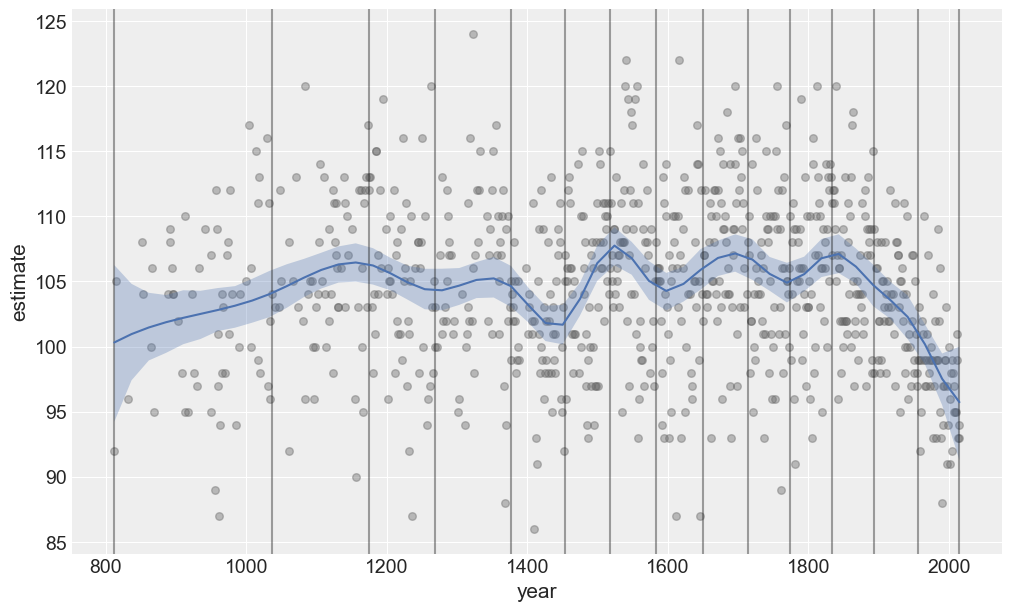

Plot predictions and credible bands

Let’s create a function to plot the predicted mean value as well as credible bands for it.

def plot_predictions(data, idata, model):

_, ax = plt.subplots(figsize=(10, 6))

# Plot predictions

p1 = bmb.interpret.plot_predictions(model, idata, conditional="year")

# Plot obserevd data

plot_scatter(data, ax, color="0.4")

# Add knots

plot_knots(knots, ax)

p1.on(ax).plot().show()plot_predictions(data, idata, model)

Advanced: Watch out the underlying design matrix

We can write linear regression models in matrix form as

\[ \mathbf{y} = \mathbf{X}\boldsymbol{\beta} \]

where \(\mathbf{y}\) is the response column vector of shape \((n, 1)\). \(\mathbf{X}\) is the design matrix that contains the values of the predictors for all the observations, of shape \((n, p)\). And \(\boldsymbol{\beta}\) is the column vector of regression coefficients of shape \((n, 1)\).

Because it’s not something that you’re supposed to consult regularly, Bambi does not expose the design matrix. However, with some knowledge of the internals, it is possible to have access to it:

np.round(model.components["mu"].design.common.design_matrix, 3)array([[1. , 1. , 0. , ..., 0. , 0. , 0. ],

[1. , 0.96 , 0.039, ..., 0. , 0. , 0. ],

[1. , 0.767, 0.221, ..., 0. , 0. , 0. ],

...,

[1. , 0. , 0. , ..., 0.002, 0.097, 0.902],

[1. , 0. , 0. , ..., 0. , 0.05 , 0.95 ],

[1. , 0. , 0. , ..., 0. , 0. , 1. ]], shape=(827, 18))Let’s have a look at its shape:

model.components["mu"].design.common.design_matrix.shape(827, 18)827 is the number of years we have data for, and 18 is the number of predictors/coefficients in the model. We have the first column of ones due to the Intercept term. Then, there are sixteen columns associated with the the basis functions. And finally, one extra column because we used span_intercept=True when calling the function bs() in the model formula.

Now we could compute the rank of the design matrix to check whether all the columns are linearly independent.

np.linalg.matrix_rank(model.components["mu"].design.common.design_matrix)np.int64(17)Since \(\text{rank}(\mathbf{X})\) is smaller than the number of columns, we conclude the columns in \(\mathbf{X}\) are not linearly independent.

If we have a second look at our code, we are going to figure out we’re spanning the intercept twice. The first time with the intercept term itself, and the second time in the spline basis.

This would have been a huge problem in a maximum likelihod estimation approach – we would have obtained an error instead of some parameter estimates. However, since we are doing Bayesian modeling, our priors ensured we obtain our regularized parameter estimates and everything seemed to work pretty well.

Nevertheless, we can still do better. Why would we want to span the intercept twice? Let’s create and fit the model again, this time without spanning the intercept in the spline basis.

# Note we use the same priors

model_new = bmb.Model("doy ~ bs(year, knots=iknots)", data, priors=priors)

idata_new = model_new.fit(random_seed=SEED, idata_kwargs={"log_likelihood": True})Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, bs(year, knots=iknots)]/home/tomas/Desktop/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/Desktop/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.And let’s have a look at the summary

az.summary(idata_new)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| sigma | 5.944 | 0.148 | 5.696 | 6.247 | 0.002 | 0.002 | 5566.0 | 2907.0 | 1.0 |

| Intercept | 102.512 | 1.959 | 99.050 | 106.343 | 0.062 | 0.035 | 1015.0 | 1504.0 | 1.0 |

| bs(year, knots=iknots)[0] | -1.074 | 3.852 | -8.062 | 6.366 | 0.100 | 0.052 | 1479.0 | 2562.0 | 1.0 |

| bs(year, knots=iknots)[1] | 0.261 | 3.075 | -5.637 | 5.834 | 0.061 | 0.044 | 2523.0 | 2594.0 | 1.0 |

| bs(year, knots=iknots)[2] | 5.541 | 2.648 | 0.644 | 10.516 | 0.070 | 0.036 | 1446.0 | 2311.0 | 1.0 |

| bs(year, knots=iknots)[3] | 0.086 | 2.512 | -4.735 | 4.696 | 0.064 | 0.034 | 1561.0 | 2508.0 | 1.0 |

| bs(year, knots=iknots)[4] | 5.102 | 2.649 | 0.438 | 10.343 | 0.067 | 0.037 | 1568.0 | 2607.0 | 1.0 |

| bs(year, knots=iknots)[5] | -4.484 | 2.454 | -9.393 | -0.102 | 0.064 | 0.035 | 1457.0 | 2448.0 | 1.0 |

| bs(year, knots=iknots)[6] | 8.644 | 2.516 | 3.625 | 13.128 | 0.070 | 0.036 | 1323.0 | 2117.0 | 1.0 |

| bs(year, knots=iknots)[7] | -0.126 | 2.602 | -4.697 | 5.037 | 0.065 | 0.036 | 1621.0 | 2413.0 | 1.0 |

| bs(year, knots=iknots)[8] | 3.847 | 2.602 | -1.043 | 8.609 | 0.066 | 0.040 | 1570.0 | 2465.0 | 1.0 |

| bs(year, knots=iknots)[9] | 5.522 | 2.618 | 0.726 | 10.455 | 0.066 | 0.035 | 1593.0 | 2552.0 | 1.0 |

| bs(year, knots=iknots)[10] | 0.680 | 2.563 | -4.204 | 5.494 | 0.067 | 0.039 | 1487.0 | 1994.0 | 1.0 |

| bs(year, knots=iknots)[11] | 6.378 | 2.581 | 1.494 | 11.213 | 0.065 | 0.034 | 1592.0 | 2538.0 | 1.0 |

| bs(year, knots=iknots)[12] | 1.580 | 2.686 | -3.738 | 6.571 | 0.068 | 0.038 | 1569.0 | 2215.0 | 1.0 |

| bs(year, knots=iknots)[13] | -0.029 | 2.997 | -5.798 | 5.478 | 0.071 | 0.043 | 1785.0 | 2814.0 | 1.0 |

| bs(year, knots=iknots)[14] | -6.105 | 3.109 | -11.693 | -0.050 | 0.071 | 0.041 | 1920.0 | 2894.0 | 1.0 |

| bs(year, knots=iknots)[15] | -6.924 | 2.955 | -12.425 | -1.316 | 0.071 | 0.039 | 1750.0 | 2438.0 | 1.0 |

There are a couple of things to remark here

- There are 16 coefficients associated with the b-spline now because we’re not spanning the intercept.

- The ESS numbers have improved in all cases. Notice the sampler isn’t raising any warning about low ESS.

- r_hat coefficients are still 1.

We can also compare the sampling times:

idata.posterior.sampling_time1.9597249031066895idata_new.posterior.sampling_time1.9691383838653564Sampling times are similar in this particular example. But in general, we expect the sampler to run faster when there aren’t structural dependencies in the design matrix.

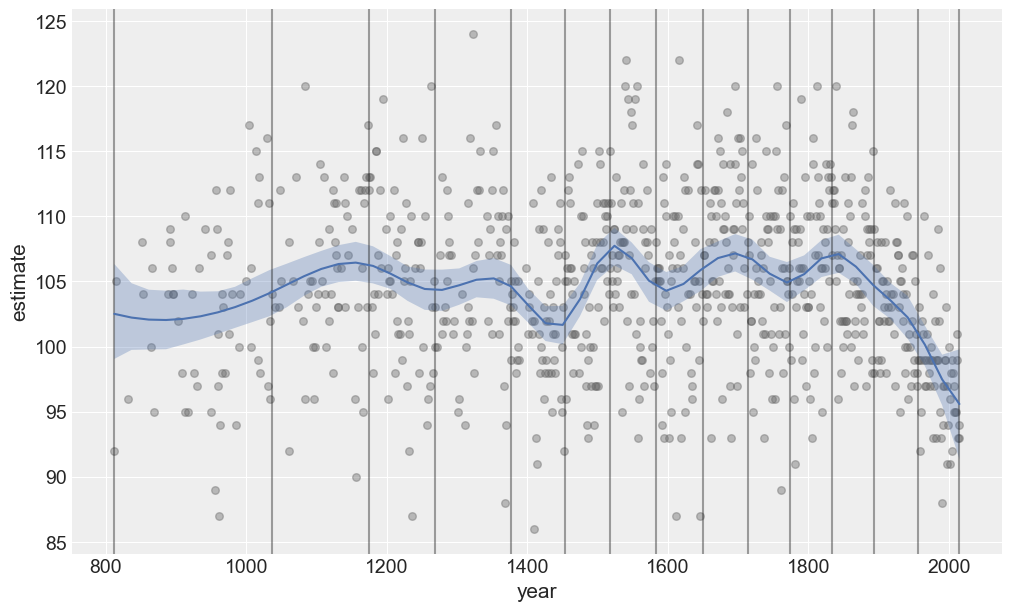

And what about predictions?

plot_predictions(data, idata_new, model_new);

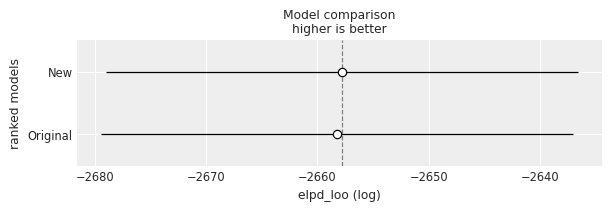

And model comparison?

models_dict = {"Original": idata, "New": idata_new}

df_compare = az.compare(models_dict)

df_compare| rank | elpd_loo | p_loo | elpd_diff | weight | se | dse | warning | scale | |

|---|---|---|---|---|---|---|---|---|---|

| New | 0 | -2657.965506 | 16.079688 | 0.000000 | 1.0 | 21.178851 | 0.000000 | False | log |

| Original | 1 | -2658.242689 | 16.526185 | 0.277183 | 0.0 | 21.170011 | 0.588634 | False | log |

az.plot_compare(df_compare, insample_dev=False);

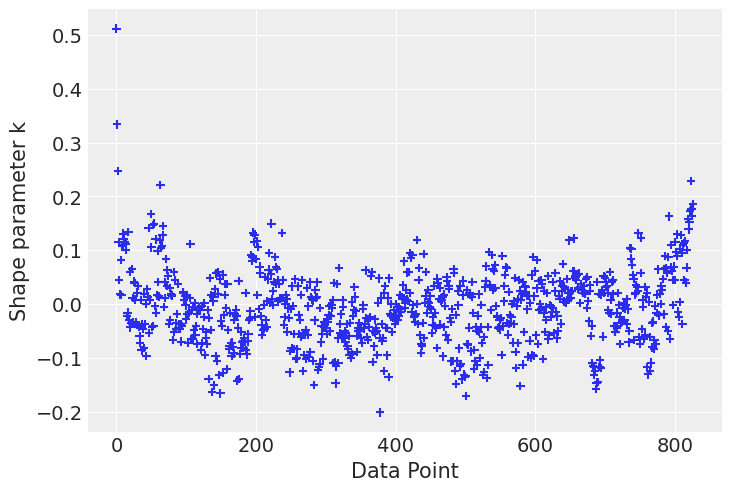

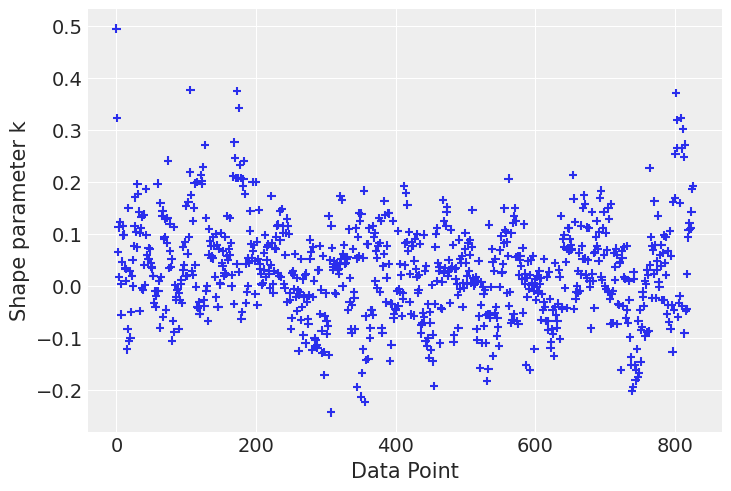

Finally let’s check influential points according to the k-hat value

# Compute pointwise LOO

loo_1 = az.loo(idata, pointwise=True)

loo_2 = az.loo(idata_new, pointwise=True)# plot kappa values

az.plot_khat(loo_1.pareto_k);/home/tomas/Desktop/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/arviz/plots/khatplot.py:184: FutureWarning: support for DataArrays will be deprecated, please use ELPDData.The reason for this, is that we need to know the numbers of drawssampled from the posterior

warnings.warn(

az.plot_khat(loo_2.pareto_k);

Final comments

Another option could have been to use stronger priors on the coefficients associated with the spline functions. For example, the example written in PyMC uses \(\text{Normal}(0, 3)\) priors on them instead of \(\text{Normal}(0, 10)\).

%load_ext watermark

%watermark -n -u -v -iv -wLast updated: Wed Feb 18 2026

Python implementation: CPython

Python version : 3.13.9

IPython version : 9.6.0

bambi : 0.16.1.dev42+g0d5870261.d20260218

matplotlib: 3.10.7

pandas : 2.3.3

numpy : 2.3.3

arviz : 0.22.0

Watermark: 2.5.0