import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

az.style.use("arviz-darkgrid")Count Regression with Variable Exposure

This example is based on the “Roaches” example from Regression and Other Stories by Gelman, Hill, and Vehtari. The example is a count regression model with an offset term.

The data is the number of roaches caught in 262 apartments. Some pest control treatment was applied to 158 (treatment=1) of the apartments, and 104 apartments received no treatment (treatment=0). The other columns in the data are:

y: the number of roaches caughtroach1: the pre-treatment roach levelsenior: indicator for whether the appartment is for seniorsexposure2: the number of trap-days (number of traps x number of days).

roaches = pd.read_csv("data/roaches.csv", index_col=0)

# rescale

roaches["roach1"] = roaches["roach1"] / 100

roaches.head()| y | roach1 | treatment | senior | exposure2 | |

|---|---|---|---|---|---|

| 1 | 153 | 3.0800 | 1 | 0 | 0.800000 |

| 2 | 127 | 3.3125 | 1 | 0 | 0.600000 |

| 3 | 7 | 0.0167 | 1 | 0 | 1.000000 |

| 4 | 7 | 0.0300 | 1 | 0 | 1.000000 |

| 5 | 0 | 0.0200 | 1 | 0 | 1.142857 |

Poisson regression

One way to model this is to say that there is some rate of roaches per trap-day , and that the number of roaches caught is a Poisson random variable with a rate that is proportional to the number of trap-days (the exposure). That is:

\[ \begin{align*} y_i &\sim \text{Poisson}(\text{exposure2}_i \times \rho_i) \\ \log(\rho_i) &= \beta_0 + \beta_1 \text{treatment}_i + \beta_2 \text{roach1}_i + \beta_3 \text{senior}_i \end{align*} \]

With a little algebra, we can rewrite this as a generalized linear model:

\[ \begin{align*} y_i &\sim \text{Poisson}(\lambda_i) \\ \log(\lambda_i) &= \beta_0 + \beta_1 \text{treatment}_i + \beta_2 \text{roach1}_i + \beta_3 \text{senior}_i + \log(\text{exposure2}_i) \end{align*} \]

However, we don’t want to estimate a coefficient for \(\log(\text{exposure2})\), we want to simply add it as an offset. In bambi we do this by using the offset function in the formula to specify that a term should not be multiplied by a coefficient to estimate and simply added. The formula for the model is then:

"y ~ roach1 + treatment + senior + offset(log(exposure2))"If you are familiar with R this offset term is the same as the offset term in the glm function.

model_1 = bmb.Model(

"y ~ roach1 + treatment + senior + offset(log(exposure2))",

family="poisson",

data=roaches

)

idata_1 = model_1.fit()Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [Intercept, roach1, treatment, senior]

/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.az.summary(idata_1)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 3.089 | 0.021 | 3.047 | 3.126 | 0.000 | 0.0 | 3984.0 | 3168.0 | 1.0 |

| roach1 | 0.698 | 0.009 | 0.682 | 0.715 | 0.000 | 0.0 | 3244.0 | 2652.0 | 1.0 |

| treatment | -0.516 | 0.025 | -0.561 | -0.467 | 0.000 | 0.0 | 4011.0 | 3088.0 | 1.0 |

| senior | -0.381 | 0.034 | -0.443 | -0.317 | 0.001 | 0.0 | 4217.0 | 3239.0 | 1.0 |

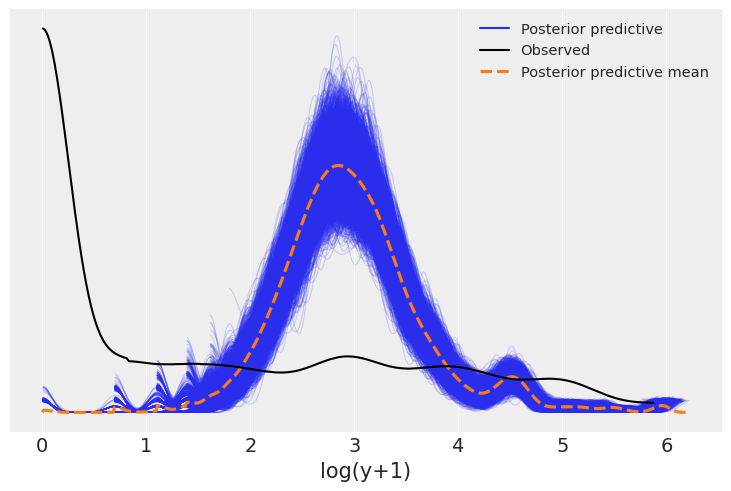

The sampling seems to have gone well based on ess and r_hat. If this were a real analysis one would also need to check priors, trace plots and other diagnostics. However, let’s see if the model predicts the distribution of roaches (y) observed. We can do this by looking at the posterior predictive distribution for the model. We plot the log of y to make the results easier to see.

def plot_log_posterior_ppc(model, idata):

# plot posterior predictive check

model.predict(idata, kind="response", inplace=True)

var_name = "log(y+1)"

# there is probably a better way

idata.posterior_predictive[var_name] = np.log(idata.posterior_predictive["y"] + 1)

idata.observed_data[var_name] = np.log(idata.observed_data["y"] + 1)

return az.plot_ppc(idata, var_names=[var_name])plot_log_posterior_ppc(model_1, idata_1);

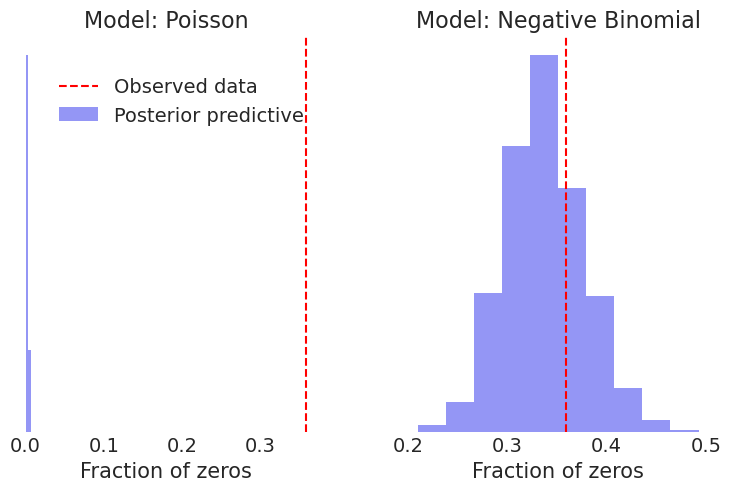

It appears that we are drastically under predicting the number of apartments with a small number of roaches. This suggests creating a test statistic measuring the fraction of zeros, both in the observed data and in the simulated replications (posterior predictions). We can then use this to check the model fit.

# check number of zeros in y

def check_zeros(idata):

# flatten over chains:

sampled_zeros = (idata.posterior_predictive["y"]==0).mean(("__obs__")).values.flatten()

print(f"Fraction of zeros in the observed data: {np.mean(roaches['y']==0)}")

print(f"Fraction of zeros in the posterior predictive check: {np.mean(sampled_zeros)}")

print(f" 80% CI: {np.percentile(sampled_zeros, [10, 90])}")

check_zeros(idata_1)Fraction of zeros in the observed data: 0.35877862595419846

Fraction of zeros in the posterior predictive check: 0.0006908396946564885

80% CI: [0. 0.00381679]The Poisson model here does not succeed in reproducing the observed fraction of zeros. In the data we have about 36% zeros, while in the replications we almost always have no zeros or very few. Gelman, Hill, and Vehtari suggest we try an overdispersed and more flexible model like the negative binomial.

Negative Binomial Fit

model_2 = bmb.Model(

"y ~ roach1 + treatment + senior + offset(log(exposure2))",

family="negativebinomial",

data=roaches

)

idata_2 = model_2.fit()Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [alpha, Intercept, roach1, treatment, senior]Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.az.summary(idata_2)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| alpha | 0.272 | 0.026 | 0.227 | 0.324 | 0.000 | 0.000 | 5974.0 | 3132.0 | 1.0 |

| Intercept | 2.851 | 0.237 | 2.414 | 3.293 | 0.003 | 0.004 | 5478.0 | 3249.0 | 1.0 |

| roach1 | 1.322 | 0.253 | 0.848 | 1.799 | 0.004 | 0.004 | 4698.0 | 3254.0 | 1.0 |

| treatment | -0.783 | 0.251 | -1.238 | -0.300 | 0.004 | 0.004 | 4998.0 | 3330.0 | 1.0 |

| senior | -0.324 | 0.260 | -0.813 | 0.160 | 0.003 | 0.004 | 5572.0 | 3363.0 | 1.0 |

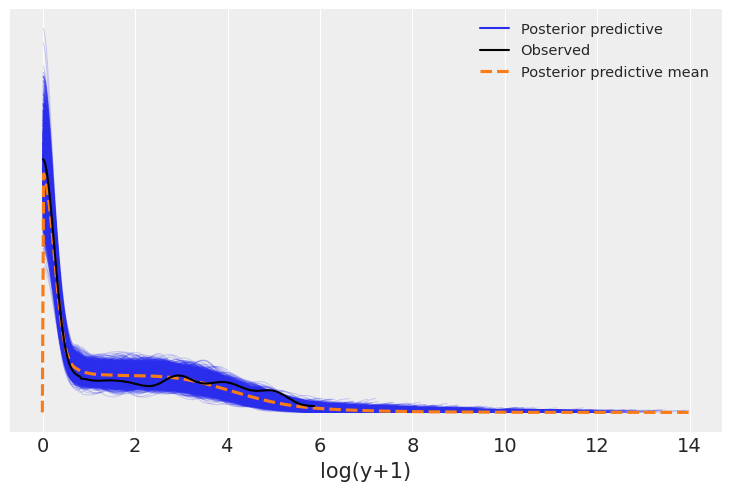

plot_log_posterior_ppc(model_2, idata_2);

check_zeros(idata_2)Fraction of zeros in the observed data: 0.35877862595419846

Fraction of zeros in the posterior predictive check: 0.3383148854961832

80% CI: [0.29007634 0.38931298]def plot_zeros(ax, idata, model_label, **kwargs):

data_zeros = np.mean(roaches['y']==0)

# flatten over chains:

sampled_zeros = (idata.posterior_predictive["y"]==0).mean(("__obs__")).values.flatten()

ax.hist(sampled_zeros, alpha=0.5, **kwargs)

ax.axvline(data_zeros, color='red', linestyle='--')

ax.set_xlabel("Fraction of zeros")

ax.set_title(f"Model: {model_label}")

ax.yaxis.set_visible(False)

ax.set_facecolor('white')

return ax

fig, ax = plt.subplots(1,2, gridspec_kw={'wspace': 0.2})

plot_zeros(ax[0],idata_1, "Poisson", bins= 2) # use 2 bins to make it more clear. Almost no zeros.

plot_zeros(ax[1],idata_2, "Negative Binomial")

fig.legend(["Observed data", "Posterior predictive"], loc='center left', bbox_to_anchor=(0.05, 0.8));

The negative binomial distribution fit works much better, predicting the number of zeros consistent with the observed data.

Regression and Other Stories introduces a further improvement by introducing a zero-inflated regression later in the chapter, but I will not persue that here, after all the point of this example is to illustrate the use of offsets.

PyMC equivalent model

The model behind the scenes looks like this for the Poission model.

pymc_model = model_1.backend

pymc_model.model\[ \begin{array}{rcl} \text{Intercept} &\sim & \operatorname{Normal}(0,~4.52)\\\text{roach1} &\sim & \operatorname{Normal}(0,~3.33)\\\text{treatment} &\sim & \operatorname{Normal}(0,~5.11)\\\text{senior} &\sim & \operatorname{Normal}(0,~5.43)\\\text{mu} &\sim & \operatorname{Deterministic}(f(\text{Intercept},~\text{senior},~\text{treatment},~\text{roach1}))\\\text{y} &\sim & \operatorname{Poisson}(\text{mu}) \end{array} \]

Let’s look at the equivalent (Poisson) model in PYMC:

import pymc as pm

with pm.Model() as model_pymc:

# priors

alpha = pm.Normal("Intercept", mu=0, sigma=4.5)

beta_roach1 = pm.Normal("beta_roach1", mu=0, sigma=3.3)

beta_treatment = pm.Normal("beta_treatment", mu=0, sigma=5.11)

beta_senior = pm.Normal("beta_senior", mu=0, sigma=5.43)

# likelihood

# see no beta for exposure2

mu = pm.math.exp(

alpha

+ beta_roach1 * roaches["roach1"]

+ beta_treatment * roaches["treatment"]

+ beta_senior * roaches["senior"]

+ pm.math.log(roaches["exposure2"])

)

y = pm.Poisson("y", mu=mu, observed=roaches["y"])

idata_pymc = pm.sample(1000)

az.summary(idata_pymc)Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [Intercept, beta_roach1, beta_treatment, beta_senior]/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)

/home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/pymc/step_methods/hmc/quadpotential.py:316: RuntimeWarning: overflow encountered in dot

return 0.5 * np.dot(x, v_out)Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 2 seconds.| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 3.089 | 0.021 | 3.051 | 3.129 | 0.000 | 0.000 | 2865.0 | 2564.0 | 1.0 |

| beta_roach1 | 0.698 | 0.009 | 0.681 | 0.714 | 0.000 | 0.000 | 3031.0 | 2947.0 | 1.0 |

| beta_treatment | -0.516 | 0.025 | -0.562 | -0.469 | 0.000 | 0.000 | 2716.0 | 2597.0 | 1.0 |

| beta_senior | -0.380 | 0.034 | -0.440 | -0.314 | 0.001 | 0.001 | 3109.0 | 2771.0 | 1.0 |

In this model (model_pymc) we have the equivalent Poisson regression with everything explicit to illustrate what the ‘offset’ function is doing. It simply makes it possible to express a term like this in the formulae string in a bambi model.

%load_ext watermark

%watermark -n -u -v -iv -wLast updated: Sun Sep 28 2025

Python implementation: CPython

Python version : 3.13.7

IPython version : 9.4.0

matplotlib: 3.10.6

arviz : 0.22.0

bambi : 0.14.1.dev56+gd93591cd2.d20250927

pymc : 3.9.2+2907.g7a3db78e6

numpy : 2.3.3

pandas : 2.3.2

Watermark: 2.5.0