import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

az.style.use("arviz-darkgrid")

SEED = 1234Bayesian Workflow (Police Officer’s Dilemma)

Here we will analyze a dataset from experimental psychology in which a sample of 36 human participants engaged in what is called the shooter task, yielding 3600 responses and reaction times (100 from each subject). The link above gives some more information about the shooter task, but basically it is a sort of crude first-person-shooter video game in which the subject plays the role of a police officer. The subject views a variety of urban scenes, and in each round or “trial” a person or “target” appears on the screen after some random interval. This person is either Black or White (with 50% probability), and they are holding some object that is either a gun or some other object like a phone or wallet (with 50% probability). When a target appears, the subject has a very brief response window – 0.85 seconds in this particular experiment – within which to press one of two keyboard buttons indicating a “shoot” or “don’t shoot” response. Subjects receive points for correct and timely responses in each trial; subjects’ scores are penalized for incorrect reponses (i.e., shooting an unarmed person or failing to shoot an armed person) or if they don’t respond within the 0.85 response window. The goal of the task, from the subject’s perspective, is to maximize their score.

The typical findings in the shooter task are that

- Subjects are quicker to respond to armed targets than to unarmed targets, but are especially quick toward armed black targets and especially slow toward unarmed black targets.

- Subjects are more likely to shoot black targets than white targets, whether they are armed or not.

Load and examine data

shooter = pd.read_csv("data/shooter.csv", na_values=".")

shooter.head(10)| subject | target | trial | race | object | time | response | |

|---|---|---|---|---|---|---|---|

| 0 | 1 | w05 | 19 | white | nogun | 658.0 | correct |

| 1 | 2 | b07 | 19 | black | gun | 573.0 | correct |

| 2 | 3 | w05 | 19 | white | gun | 369.0 | correct |

| 3 | 4 | w07 | 19 | white | gun | 495.0 | correct |

| 4 | 5 | w15 | 19 | white | nogun | 483.0 | correct |

| 5 | 6 | w96 | 19 | white | nogun | 786.0 | correct |

| 6 | 7 | w13 | 19 | white | nogun | 519.0 | correct |

| 7 | 8 | w06 | 19 | white | nogun | 567.0 | correct |

| 8 | 9 | b14 | 19 | black | gun | 672.0 | incorrect |

| 9 | 10 | w90 | 19 | white | gun | 457.0 | correct |

The design of the experiment is such that the subject, target, and object (i.e., gun vs. no gun) factors are fully crossed: each subject views each target twice, once with a gun and once without a gun.

pd.crosstab(shooter["subject"], [shooter["target"], shooter["object"]])| target | b01 | b02 | b03 | b04 | b05 | ... | w95 | w96 | w97 | w98 | w99 | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| object | gun | nogun | gun | nogun | gun | nogun | gun | nogun | gun | nogun | ... | gun | nogun | gun | nogun | gun | nogun | gun | nogun | gun | nogun |

| subject | |||||||||||||||||||||

| 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 2 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 3 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 4 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 5 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 6 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 7 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 8 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 9 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 10 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 11 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 12 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 13 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 14 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 15 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 16 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 17 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 18 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 19 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 20 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 21 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 22 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 23 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 24 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 25 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 26 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 27 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 28 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 29 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 30 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 31 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 32 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 33 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 34 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 35 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

| 36 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | ... | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 | 1 |

36 rows × 100 columns

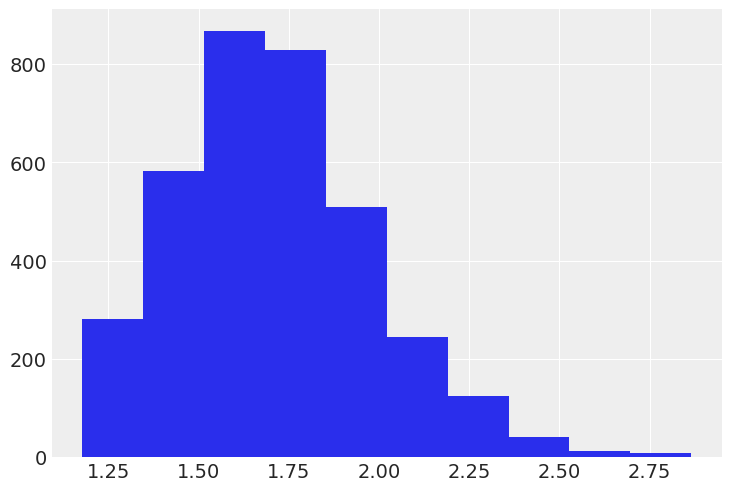

The response speeds on each trial are recorded given as reaction times (milliseconds per response), but here we invert them to and multiply by 1000 so that we are analyzing response rates (responses per second). There is no theoretical reason to prefer one of these metrics over the other, but it turns out that response rates tend to have nicer distributional properties than reaction times (i.e., deviate less strongly from the standard Gaussian assumptions), so response rates will be a little more convenient for us by allowing us to use some fairly standard distributional models.

shooter["rate"] = 1000.0 / shooter["time"]plt.hist(shooter["rate"].dropna());

Fit response rate models

Subject specific effects only

Our first model is analogous to how the data from the shooter task are usually analyzed: incorporating all subject-level sources of variability, but ignoring the sampling variability due to the sample of 50 targets. This is a Bayesian generalized linear mixed model (GLMM) with a Normal response and with intercepts and slopes that vary randomly across subjects.

Of note here is the S(x) syntax, which is from the Formulae library that we use to parse model formulas. This instructs Bambi to use contrast codes of -1 and +1 for the two levels of each of the common factors of race (black vs. white) and object (gun vs. no gun), so that the race and object coefficients can be interpreted as simple effects on average across the levels of the other factor (directly analogous, but not quite equivalent, to the main effects). This is the standard coding used in ANOVA.

subj_model = bmb.Model(

"rate ~ S(race) * S(object) + (S(race) * S(object) | subject)",

shooter,

dropna=True

)

subj_fitted = subj_model.fit(random_seed=SEED)Automatically removing 98/3600 rows from the dataset.

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, S(race), S(object), S(race):S(object), 1|subject_sigma, 1|subject_offset, S(race)|subject_sigma, S(race)|subject_offset, S(object)|subject_sigma, S(object)|subject_offset, S(race):S(object)|subject_sigma, S(race):S(object)|subject_offset]Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 10 seconds.First let’s visualize the default priors that Bambi automatically decided on for each of the parameters. We do this by calling the .plot_priors() method of the Model object.

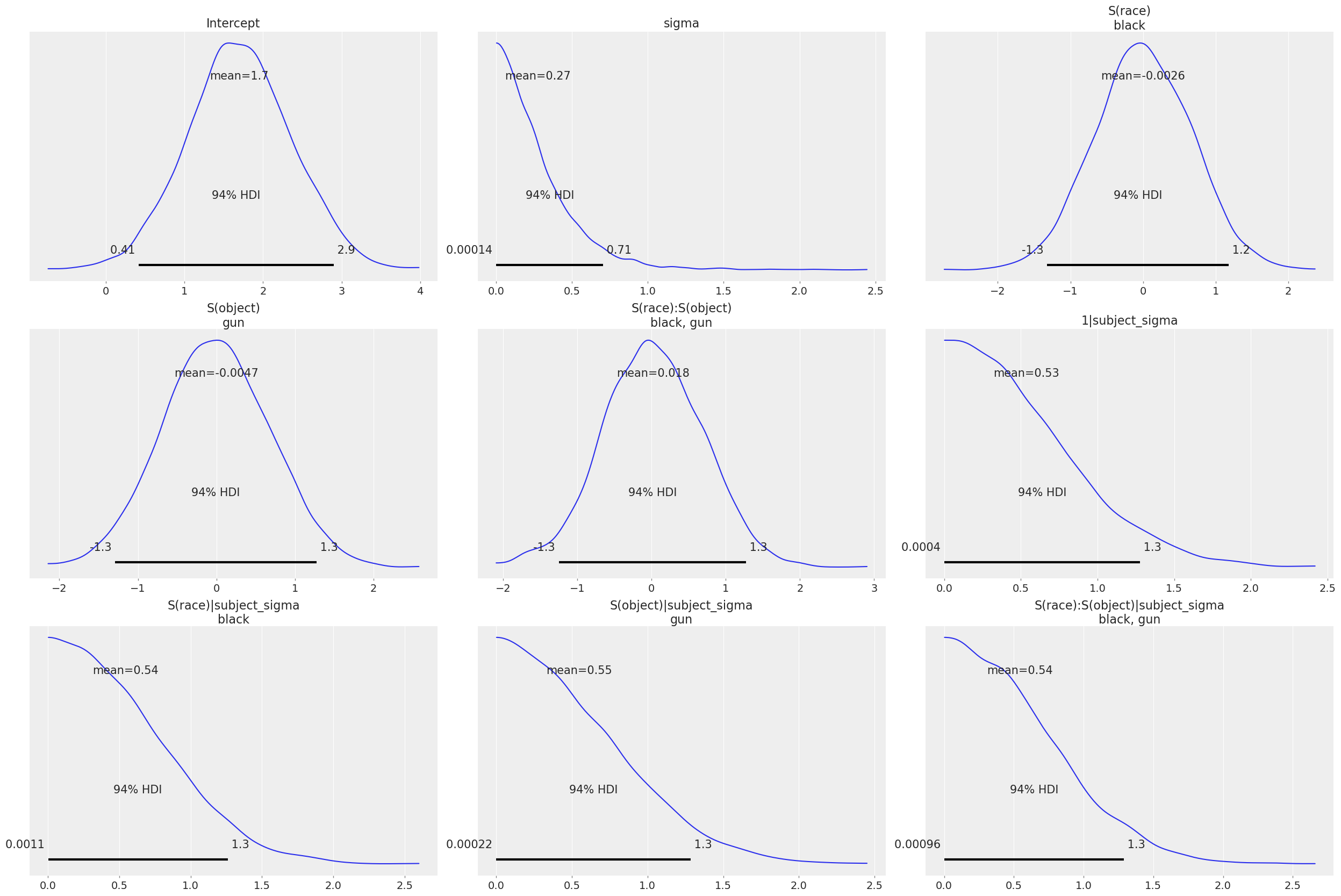

subj_model.plot_priors();Sampling: [1|subject_sigma, Intercept, S(object), S(object)|subject_sigma, S(race), S(race):S(object), S(race):S(object)|subject_sigma, S(race)|subject_sigma, sigma]

The priors on the common effects seem quite reasonable. Recall that because of the -1 vs +1 contrast coding, the coefficients correspond to half the difference between the two levels of each factor. So the priors on the common effects essentially say that the black vs. white and gun vs. no gun (and their interaction) response rate differences are very unlikely to be as large as a full response per second.

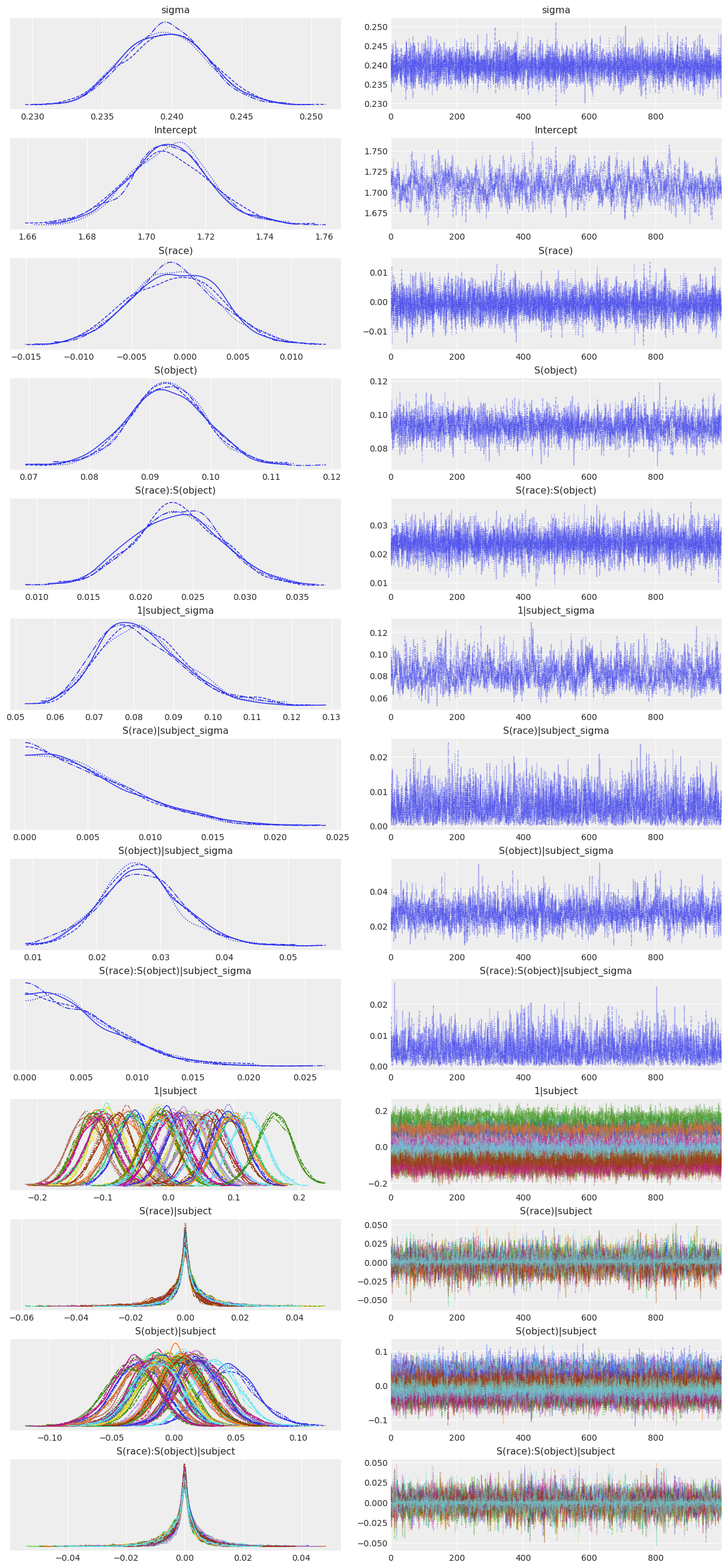

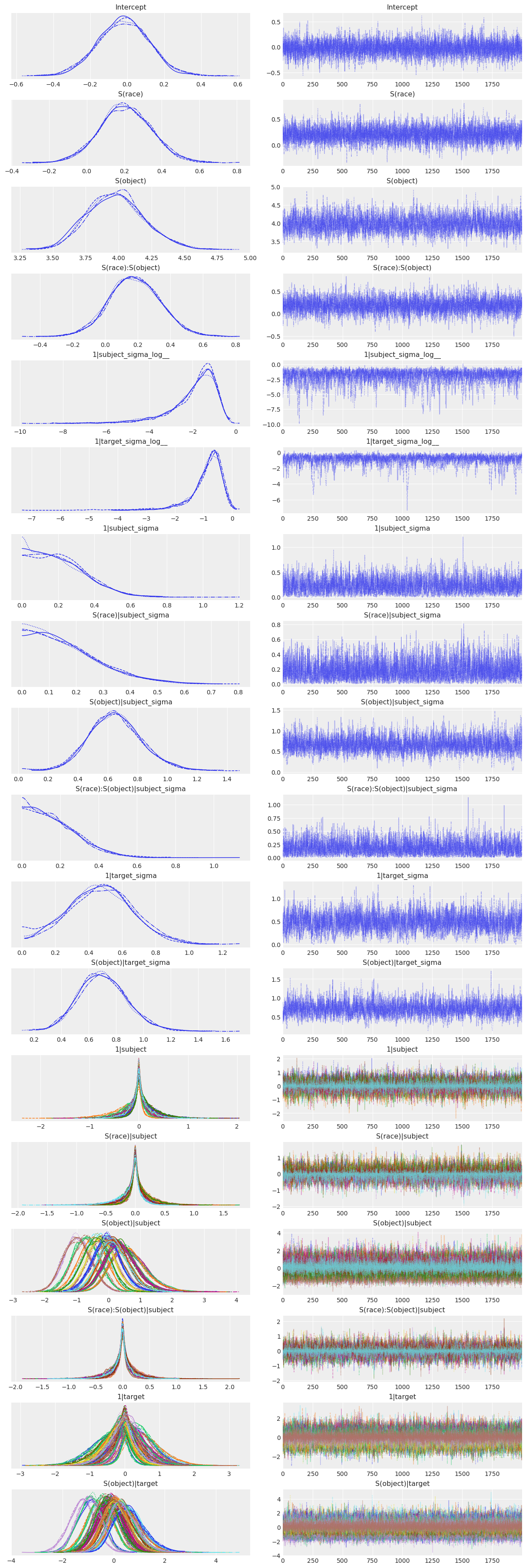

Now let’s visualize the model estimates. We do this by passing the InferenceData object that resulted from the Model.fit() call to az.plot_trace().

az.plot_trace(subj_fitted);

Each distribution in the plots above has 2 densities because we used 2 MCMC chains, so we are viewing the results of all 2 chains prior to their aggregation. The main message from the plot above is that the chains all seem to have converged well and the resulting posterior distributions all look quite reasonable. It’s a bit easier to digest all this information in a concise, tabular form, which we can get by passing the object that resulted from the Model.fit() call to az.summary().

az.summary(subj_fitted)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| sigma | 0.240 | 0.003 | 0.234 | 0.245 | 0.000 | 0.0 | 6285.0 | 3104.0 | 1.0 |

| Intercept | 1.708 | 0.014 | 1.679 | 1.733 | 0.001 | 0.0 | 701.0 | 1232.0 | 1.0 |

| S(race)[black] | -0.001 | 0.004 | -0.009 | 0.007 | 0.000 | 0.0 | 7130.0 | 3021.0 | 1.0 |

| S(object)[gun] | 0.093 | 0.006 | 0.080 | 0.104 | 0.000 | 0.0 | 2618.0 | 3025.0 | 1.0 |

| S(race):S(object)[black, gun] | 0.024 | 0.004 | 0.016 | 0.032 | 0.000 | 0.0 | 6326.0 | 3258.0 | 1.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| S(race):S(object)|subject[black, gun, 32] | -0.001 | 0.006 | -0.014 | 0.010 | 0.000 | 0.0 | 6294.0 | 2998.0 | 1.0 |

| S(race):S(object)|subject[black, gun, 33] | -0.000 | 0.006 | -0.012 | 0.012 | 0.000 | 0.0 | 6135.0 | 3184.0 | 1.0 |

| S(race):S(object)|subject[black, gun, 34] | -0.000 | 0.006 | -0.012 | 0.013 | 0.000 | 0.0 | 5632.0 | 3370.0 | 1.0 |

| S(race):S(object)|subject[black, gun, 35] | 0.000 | 0.006 | -0.012 | 0.012 | 0.000 | 0.0 | 6136.0 | 2966.0 | 1.0 |

| S(race):S(object)|subject[black, gun, 36] | -0.001 | 0.006 | -0.013 | 0.011 | 0.000 | 0.0 | 5639.0 | 3284.0 | 1.0 |

153 rows × 9 columns

The take-home message from the analysis seems to be that we do find evidence for the usual finding that subjects are especially quick to respond (presumably with a shoot response) to armed black targets and especially slow to respond to unarmed black targets (while unarmed white targets receive “don’t shoot” responses with less hesitation). We see this in the fact that the marginal posterior for the S(race):S(object) interaction coefficient is concentrated strongly away from 0.

Stimulus specific effects

A major flaw in the analysis above is that stimulus specific effects are ignored. The model does include group specific effects for subjects, reflecting the fact that the subjects we observed are but a sample from the broader population of subjects we are interested in and that potentially could have appeared in our study. But the targets we observed – the 50 photographs of white and black men that subjets responded to – are also but a sample from the broader theoretical population of targets we are interested in talking about, and that we could have just as easily and justifiably used as the experimental stimuli in the study. Since the stimuli comprise a random sample, they are subject to sampling variability, and this sampling variability should be accounted in the analysis by including stimulus specific effects. For some more information on this, see here, particularly pages 62-63.

To account for this, we let the intercept and slope for object be different for each target. Specific slopes for object across targets are possible because, if you recall, the design of the study was such that each target gets viewed twice by each subject, once with a gun and once without a gun. However, because each target is always either white or black, it’s not possible to add group specific slopes for the race factor or the interaction.

stim_model = bmb.Model(

"rate ~ S(race) * S(object) + (S(race) * S(object) | subject) + (S(object) | target)",

shooter,

dropna=True

)

stim_fitted = stim_model.fit(inference_method="nutpie", random_seed=SEED)Automatically removing 98/3600 rows from the dataset. /home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/nutpie/compile_pymc.py:782: NumbaPerformanceWarning: np.dot() is faster on contiguous arrays, called on (Array(float64, 2, 'C', True, aligned=True), Array(float64, 2, 'A', False, aligned=True)) return inner(x) /home/tomas/oss/bambinos/bambi/.pixi/envs/dev/lib/python3.13/site-packages/nutpie/compile_pymc.py:782: NumbaPerformanceWarning: np.dot() is faster on contiguous arrays, called on (Array(float64, 2, 'F', True, aligned=True), Array(float64, 2, 'A', False, aligned=True)) return inner(x)

Sampler Progress

Total Chains: 4

Active Chains: 0

Finished Chains: 4

Sampling for now

Estimated Time to Completion: now

| Progress | Draws | Divergences | Step Size | Gradients/Draw |

|---|---|---|---|---|

| 2000 | 0 | 0.31 | 15 | |

| 2000 | 0 | 0.31 | 15 | |

| 2000 | 0 | 0.31 | 15 | |

| 2000 | 0 | 0.32 | 15 |

Now let’s look at the results…

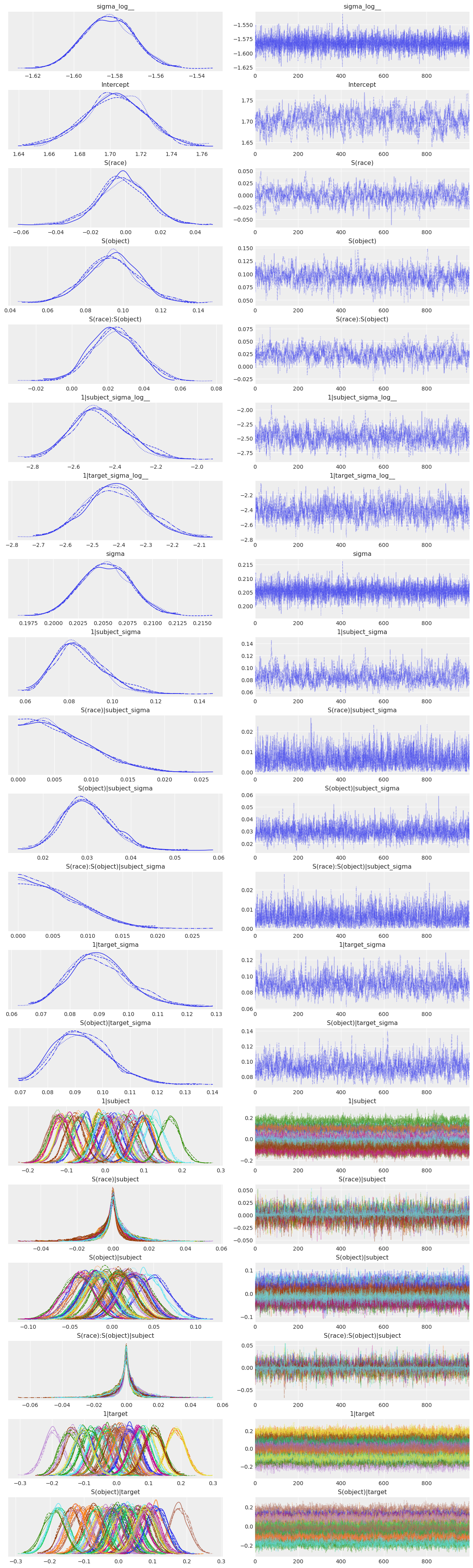

az.plot_trace(stim_fitted);

az.summary(stim_fitted)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| sigma_log__ | -1.583 | 0.012 | -1.605 | -1.559 | 0.000 | 0.000 | 7104.0 | 2924.0 | 1.00 |

| Intercept | 1.703 | 0.020 | 1.664 | 1.739 | 0.001 | 0.001 | 366.0 | 761.0 | 1.00 |

| S(race)[black] | -0.001 | 0.014 | -0.030 | 0.024 | 0.001 | 0.000 | 467.0 | 904.0 | 1.00 |

| S(object)[gun] | 0.094 | 0.014 | 0.067 | 0.122 | 0.001 | 0.000 | 565.0 | 901.0 | 1.02 |

| S(race):S(object)[black, gun] | 0.024 | 0.013 | 0.001 | 0.050 | 0.001 | 0.000 | 519.0 | 1024.0 | 1.01 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| S(object)|target[gun, w95] | -0.176 | 0.029 | -0.231 | -0.121 | 0.001 | 0.000 | 1126.0 | 1970.0 | 1.00 |

| S(object)|target[gun, w96] | 0.079 | 0.030 | 0.020 | 0.131 | 0.001 | 0.000 | 1152.0 | 1968.0 | 1.01 |

| S(object)|target[gun, w97] | 0.006 | 0.030 | -0.046 | 0.066 | 0.001 | 0.000 | 1284.0 | 2094.0 | 1.01 |

| S(object)|target[gun, w98] | 0.086 | 0.029 | 0.031 | 0.142 | 0.001 | 0.000 | 1036.0 | 2039.0 | 1.01 |

| S(object)|target[gun, w99] | -0.020 | 0.030 | -0.073 | 0.040 | 0.001 | 0.001 | 1195.0 | 1634.0 | 1.00 |

258 rows × 9 columns

There are two interesting things to note here. The first is that the key interaction effect, S(race):S(object) is much less clear now. The marginal posterior is still mostly concentrated away from 0, but there’s certainly a nontrivial part that overlaps with 0; 2.9% of the distribution, to be exact.

(stim_fitted.posterior["S(race):S(object)"] < 0).mean()<xarray.DataArray 'S(race):S(object)' ()> Size: 8B array(0.03225)

The second interesting thing is that the two new variance components in the model, those associated with the stimulus specific effects, are actually rather large. This actually largely explains the first fact above, since if these where estimated to be close to 0 anyway, the model estimates wouldn’t be much different than they were in the subj_model. It makes sense that there is a strong tendency for different targets to elicit difference reaction times on average, which leads to a large estimate of 1|target_sigma.

Less obviously, the large estimate of S(object)|target_sigma (targets tend to vary a lot in their response rate differences when they have a gun vs. some other object) also makes sense, because in this experiment, different targets were pictured with different non-gun objects. Some of these objects, such as a bright red can of Coca-Cola, are not easily confused with a gun, so subjects are able to quickly decide on the correct response. Other objects, such as a black cell phone, are possibly easier to confuse with a gun, so subjects take longer to decide on the correct response when confronted with this object.

Since each target is yoked to a particular non-gun object, there is good reason to expect large target-to-target variability in the object effect, which is indeed what we see in the model estimates.

Fit response models

Here we seek evidence of the second traditional finding, that subjects are more likely to response ‘shoot’ toward black targets than toward white targets, regardless of whether they are armed or not. Currently the dataset just records whether the given response was correct or not, so first we transformed this into whether the response was ‘shoot’ or ‘dontshoot’.

shooter["shoot_or_not"] = shooter["response"].astype(str)

# armed targets

new_values = {"correct": "shoot", "incorrect": "dontshoot", "timeout": np.nan}

shooter.loc[shooter["object"] == "gun", "shoot_or_not"] = (

shooter.loc[shooter["object"] == "gun", "response"].astype(str).replace(new_values)

)

# unarmed targets

new_values = {"correct": "dontshoot", "incorrect": "shoot", "timeout": np.nan}

shooter.loc[shooter["object"] == "nogun", "shoot_or_not"] = (

shooter.loc[shooter["object"] == "nogun", "response"].astype(str).replace(new_values)

)

# view result

shooter.head(20)| subject | target | trial | race | object | time | response | rate | shoot_or_not | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | w05 | 19 | white | nogun | 658.0 | correct | 1.519757 | dontshoot |

| 1 | 2 | b07 | 19 | black | gun | 573.0 | correct | 1.745201 | shoot |

| 2 | 3 | w05 | 19 | white | gun | 369.0 | correct | 2.710027 | shoot |

| 3 | 4 | w07 | 19 | white | gun | 495.0 | correct | 2.020202 | shoot |

| 4 | 5 | w15 | 19 | white | nogun | 483.0 | correct | 2.070393 | dontshoot |

| 5 | 6 | w96 | 19 | white | nogun | 786.0 | correct | 1.272265 | dontshoot |

| 6 | 7 | w13 | 19 | white | nogun | 519.0 | correct | 1.926782 | dontshoot |

| 7 | 8 | w06 | 19 | white | nogun | 567.0 | correct | 1.763668 | dontshoot |

| 8 | 9 | b14 | 19 | black | gun | 672.0 | incorrect | 1.488095 | dontshoot |

| 9 | 10 | w90 | 19 | white | gun | 457.0 | correct | 2.188184 | shoot |

| 10 | 11 | w91 | 19 | white | nogun | 599.0 | correct | 1.669449 | dontshoot |

| 11 | 12 | b17 | 19 | black | nogun | 769.0 | correct | 1.300390 | dontshoot |

| 12 | 13 | b04 | 19 | black | nogun | 600.0 | correct | 1.666667 | dontshoot |

| 13 | 14 | w17 | 19 | white | nogun | 653.0 | correct | 1.531394 | dontshoot |

| 14 | 15 | b93 | 19 | black | gun | 468.0 | correct | 2.136752 | shoot |

| 15 | 16 | w96 | 19 | white | gun | 546.0 | correct | 1.831502 | shoot |

| 16 | 17 | w91 | 19 | white | gun | 591.0 | incorrect | 1.692047 | dontshoot |

| 17 | 18 | b95 | 19 | black | gun | NaN | timeout | NaN | NaN |

| 18 | 19 | b09 | 19 | black | gun | 656.0 | correct | 1.524390 | shoot |

| 19 | 20 | b02 | 19 | black | gun | 617.0 | correct | 1.620746 | shoot |

Let’s skip straight to the correct model that includes stimulus specific effects. This looks quite similiar to the stim_model from above except that we change the response to the new shoot_or_not variable – notice the [shoot] syntax indicating that we wish to model the prbability that shoot_or_not=='shoot', not shoot_or_not=='dontshoot' – and then change to family='bernoulli' to indicate a mixed effects logistic regression.

stim_response_model = bmb.Model(

"shoot_or_not[shoot] ~ S(race)*S(object) + (S(race)*S(object) | subject) + (S(object) | target)",

shooter,

family="bernoulli",

dropna=True

)

# Note we increased target_accept from default 0.8 to 0.9 because there were divergences

stim_response_fitted = stim_response_model.fit(

inference_method="nutpie",

draws=2000,

target_accept=0.9,

random_seed=SEED

)Automatically removing 98/3600 rows from the dataset.

Modeling the probability that shoot_or_not==shootSampler Progress

Total Chains: 4

Active Chains: 0

Finished Chains: 4

Sampling for 12 seconds

Estimated Time to Completion: now

| Progress | Draws | Divergences | Step Size | Gradients/Draw |

|---|---|---|---|---|

| 3000 | 0 | 0.26 | 15 | |

| 3000 | 0 | 0.24 | 15 | |

| 3000 | 0 | 0.24 | 15 | |

| 3000 | 0 | 0.24 | 15 |

Show the trace plot

az.plot_trace(stim_response_fitted);

Looks pretty good! Now for the more concise summary.

az.summary(stim_response_fitted)| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | -0.019 | 0.141 | -0.282 | 0.245 | 0.002 | 0.002 | 4768.0 | 4758.0 | 1.0 |

| S(race)[black] | 0.208 | 0.139 | -0.063 | 0.458 | 0.002 | 0.002 | 4429.0 | 4656.0 | 1.0 |

| S(object)[gun] | 3.980 | 0.218 | 3.590 | 4.406 | 0.004 | 0.003 | 2408.0 | 3346.0 | 1.0 |

| S(race):S(object)[black, gun] | 0.181 | 0.157 | -0.117 | 0.473 | 0.003 | 0.002 | 3386.0 | 4798.0 | 1.0 |

| 1|subject_sigma_log__ | -1.943 | 1.121 | -4.047 | -0.396 | 0.033 | 0.047 | 1541.0 | 1200.0 | 1.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... | ... |

| S(object)|target[gun, w95] | 0.349 | 0.566 | -0.669 | 1.452 | 0.006 | 0.007 | 9348.0 | 5308.0 | 1.0 |

| S(object)|target[gun, w96] | 0.061 | 0.522 | -0.893 | 1.058 | 0.006 | 0.006 | 8873.0 | 6140.0 | 1.0 |

| S(object)|target[gun, w97] | 0.314 | 0.543 | -0.652 | 1.380 | 0.006 | 0.007 | 8162.0 | 6023.0 | 1.0 |

| S(object)|target[gun, w98] | 0.348 | 0.560 | -0.710 | 1.407 | 0.006 | 0.007 | 9269.0 | 5641.0 | 1.0 |

| S(object)|target[gun, w99] | 0.053 | 0.521 | -0.933 | 1.037 | 0.005 | 0.006 | 9767.0 | 6513.0 | 1.0 |

256 rows × 9 columns

There is some slight evidence here for the hypothesis that subjects are more likely to shoot the black targets, regardless of whether they are armed or not, but the evidence is not too strong. The marginal posterior for the S(race) coefficient is mostly concentrated away from 0, but it overlaps even more in this case with 0 than did the key interaction effect in the previous model.

(stim_response_fitted.posterior["S(race)"] < 0).mean()<xarray.DataArray 'S(race)' ()> Size: 8B array(0.062625)

%load_ext watermark

%watermark -n -u -v -iv -wLast updated: Sun Sep 28 2025

Python implementation: CPython

Python version : 3.13.7

IPython version : 9.4.0

bambi : 0.14.1.dev58+gb25742785.d20250928

matplotlib: 3.10.6

arviz : 0.22.0

pandas : 2.3.2

numpy : 2.3.3

Watermark: 2.5.0