import arviz as az

import bambi as bmb

import matplotlib.pyplot as plt

import numpy as np

import pandas as pd

from matplotlib.lines import Line2D

from scipy import stats

az.style.use("arviz-white")Circular Regression

Directional statistics, also known as circular statistics or spherical statistics, refers to a branch of statistics dealing with data which domain is the unit circle, as opposed to “linear” data which support is the real line. Circular data is convenient when dealing with directions or rotations. Some examples include temporal periods like hours or days, compass directions, dihedral angles in biomolecules, etc.

The fact that a Sunday can be both the day before or after a Monday, or that 0 is a “better average” for 2 and 358 degrees than 180 are illustrations that circular data and circular statistical methods are better equipped to deal with this kind of problem than the more familiar methods [1].

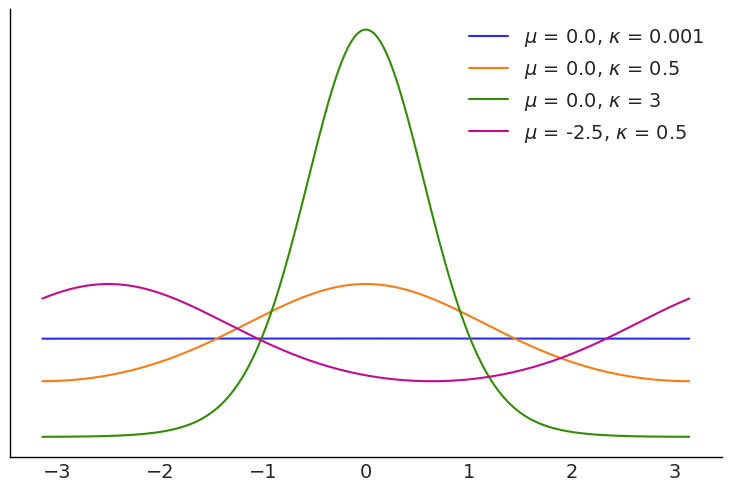

There are a few circular distributions, one of them is the VonMises distribution, that we can think as the cousin of the Gaussian that lives in circular space. The domain of this distribution is any interval of length \(2\pi\). We are going to adopt the convention that the interval goes from \(-\pi\) to \(\pi\), so for example 0 radians is the same as \(2\pi\). The VonMises is defined using two parameters, the mean \(\mu\) (the circular mean) and the concentration \(\kappa\), with \(\frac{1}{\kappa}\) being analogue of the variance. Let see a few examples of the VonMises family:

x = np.linspace(-np.pi, np.pi, 200)

mus = [0., 0., 0., -2.5]

kappas = [.001, 0.5, 3, 0.5]

for mu, kappa in zip(mus, kappas):

pdf = stats.vonmises.pdf(x, kappa, loc=mu)

plt.plot(x, pdf, label=r'$\mu$ = {}, $\kappa$ = {}'.format(mu, kappa))

plt.yticks([])

plt.legend(loc=1);

When doing linear regression a commonly used link function is \(2 \arctan(u)\) this ensure that values over the real line are mapped into the interval \([-\pi, \pi]\)

u = np.linspace(-12, 12, 200)

plt.plot(u, 2*np.arctan(u))

plt.xlabel("Reals")

plt.ylabel("Radians");

Bambi supports circular regression with the VonMises family, to exemplify this we are going to use a dataset from the following experiment. 31 periwinkles (a kind of sea snail) were removed from it original place and released down shore. Then, our task is to model the direction of motion as function of the distance travelled by them after being release.

data = bmb.load_data("periwinkles")

data["distance"] = data["distance"].astype(pd.Float64Dtype())

data.head()| distance | direction | |

|---|---|---|

| 0 | 107.0 | 1.169371 |

| 1 | 46.0 | 1.151917 |

| 2 | 33.0 | 1.291544 |

| 3 | 67.0 | 1.064651 |

| 4 | 122.0 | 1.012291 |

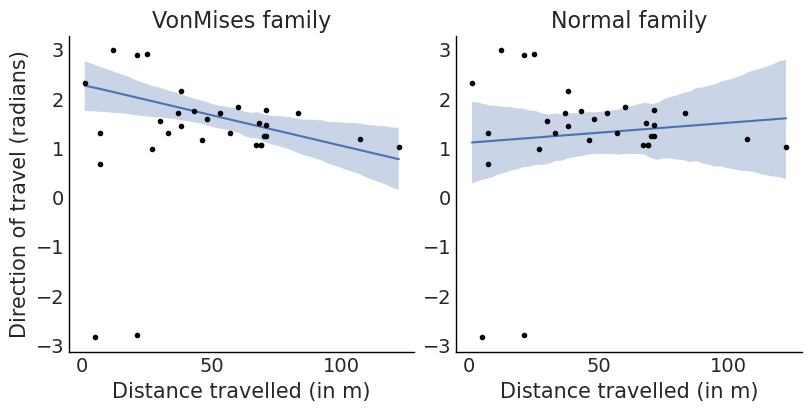

Just to compare results, we are going to use the VonMises family and the normal (default) family.

model_vm = bmb.Model("direction ~ distance", data, family="vonmises")

idata_vm = model_vm.fit(include_response_params=True)

model_n = bmb.Model("direction ~ distance", data)

idata_n = model_n.fit(include_response_params=True)Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [kappa, Intercept, distance]Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 1 seconds.

The rhat statistic is larger than 1.01 for some parameters. This indicates problems during sampling. See https://arxiv.org/abs/1903.08008 for details

The effective sample size per chain is smaller than 100 for some parameters. A higher number is needed for reliable rhat and ess computation. See https://arxiv.org/abs/1903.08008 for details

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, distance]Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 1 seconds.az.summary(idata_vm, var_names=["~mu"])| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| kappa | 2.707 | 0.603 | 1.667 | 3.893 | 0.010 | 0.010 | 4006.0 | 2871.0 | 1.00 |

| Intercept | 0.710 | 2.734 | -4.291 | 2.757 | 1.356 | 0.779 | 7.0 | 31.0 | 1.53 |

| distance | -0.012 | 0.005 | -0.021 | -0.004 | 0.000 | 0.000 | 4484.0 | 2607.0 | 1.00 |

fig, axes = plt.subplots(1,2, figsize=(8, 4), sharey=True)

p1 = bmb.interpret.plot_predictions(model_vm, idata_vm, "distance")

p1.on(axes[0]).plot()

axes[0].plot(data.distance, data.direction, "k.")

axes[0].set(

xlabel="Distance travelled (in m)",

ylabel="Direction of travel (radians)",

title="VonMises family"

);

p2 = bmb.interpret.plot_predictions(model_n, idata_n, "distance")

p2.on(axes[1]).plot()

axes[1].plot(data.distance, data.direction, "k.")

axes[1].set(

xlabel="Distance travelled (in m)",

ylabel=None,

title="Normal family"

);

We can see that there is a negative relationship between distance and direction. This could be explained as Periwinkles travelling in a direction towards the sea travelled shorter distances than those travelling in directions away from it. From a biological perspective, this could have been due to a propensity of the periwinkles to stop moving once they are close to the sea.

We can also see that if inadvertently we had assumed a normal response we would have obtained a fit with higher uncertainty and more importantly the wrong sign for the relationship.

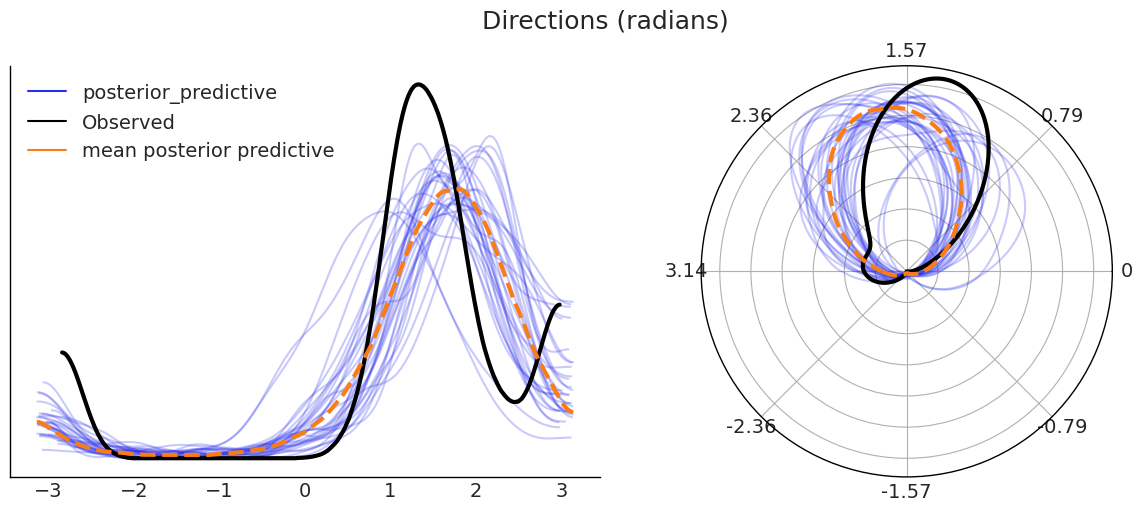

As a last step for this example we are going to do a posterior predictive check. In the figure below we have to panels showing the same data, with the only difference that the on the right is using a polar projection and the KDE are computing taking into account the circularity of the data.

We can see that our modeling is failing at capturing the bimodality in the data (with mode around 1.6 and \(\pm \pi\)) and hence the predicted distribution is wider and with a mean closer to \(\pm \pi\).

fig = plt.figure(figsize=(12, 5))

ax0 = plt.subplot(121)

ax1 = plt.subplot(122, projection="polar")

model_vm.predict(idata_vm, kind="response")

pp_samples = az.extract_dataset(idata_vm, group="posterior_predictive", num_samples=200)["direction"]

colors = ["C0" , "k", "C1"]

for ax, circ in zip((ax0, ax1), (False, "radians", colors)):

for s in pp_samples:

az.plot_kde(s.values, plot_kwargs={"color":colors[0], "alpha": 0.25}, is_circular=circ, ax=ax)

az.plot_kde(idata_vm.observed_data["direction"].values,

plot_kwargs={"color":colors[1], "lw":3}, is_circular=circ, ax=ax)

az.plot_kde(idata_vm.posterior_predictive["direction"].values,

plot_kwargs={"color":colors[2], "ls":"--", "lw":3}, is_circular=circ, ax=ax)

custom_lines = [Line2D([0], [0], color=c) for c in colors]

ax0.legend(custom_lines, ["posterior_predictive", "Observed", 'mean posterior predictive'])

ax0.set_yticks([])

fig.suptitle("Directions (radians)", fontsize=18);/var/folders/zf/8rkyygg940vcg480mvkfqwl40000gn/T/ipykernel_6351/706974292.py:6: FutureWarning: extract_dataset has been deprecated, please use extract

pp_samples = az.extract_dataset(idata_vm, group="posterior_predictive", num_samples=200)["direction"]

We have shown an example of regression where the response variable is circular and the covariates are linear. This is sometimes refereed as linear-circular regression in order to distinguish it from other cases. Namely, when the response is linear and the covariates (or at least one of them) is circular the name circular-linear regression is often used. And when both covariates and the response variables are circular, we have a circular-circular regression. When the covariates are circular they are usually modelled with the help of sin and cosine functions. You can read more about this kind of regression and other circular statistical methods in the following books.

- Circular statistics in R

- Modern directional statistics

- Applied Directional Statistics

- Directional Statistics

%load_ext watermark

%watermark -n -u -v -iv -wLast updated: Tue, 17 Feb 2026

Python implementation: CPython

Python version : 3.13.12

IPython version : 9.10.0

arviz : 0.23.4

bambi : 0.1.dev890+g3e105f2cc.d20260217

matplotlib: 3.10.8

numpy : 2.3.5

pandas : 2.3.3

scipy : 1.17.0

Watermark: 2.6.0