from glob import glob

import arviz as az

import bambi as bmb

import pandas as pd

az.style.use("arviz-darkgrid")Bayesian Workflow (Strack RRR Analysis Replication)

In this Jupyter notebook, we do a Bayesian reanalysis of the data reported in the recent registered replication report (RRR) of a famous study by Strack, Martin & Stepper (1988). The original Strack et al. study tested a facial feedback hypothesis arguing that emotional responses are, in part, driven by facial expressions (rather than expressions always following from emotions). Strack and colleagues reported that participants rated cartoons as more funny when the participants held a pen in their teeth (unknowingly inducing a smile) than when they held a pen between their lips (unknowingly inducing a pout). The article has been cited over 1,400 times, and has been enormously influential in popularizing the view that affective experiences and outward expressions of affective experiences can both influence each other (instead of the relationship being a one-way street from experience to expression). In 2016, a Registered Replication Report led by Wagenmakers and colleagues attempted to replicate Study 1 from Strack, Martin, & Stepper (1988) in 17 independent experiments comprising over 2,500 participants. The RRR reported no evidence in support of the effect.

Because the emphasis here is on fitting models in Bambi, we spend very little time on quality control and data exploration; our goal is simply to show how one can replicate and extend the primary analysis reported in the RRR in a few lines of Bambi code.

Reading in the data

The data for the RRR of Strack, Martin, & Stepper (henceforth SMS) is available as a set of CSV files from the project’s repository on the Open Science Framework. For the sake of completeness, we’ll show how to go from the raw CSV to the “long” data format that Bambi can use.

One slightly annoying thing about these 17 CSV files–each of which represents a different replication site–is that they don’t all contain exactly the same columns. Some labs added a column or two at the end (mostly for notes). To keep things simple, we’ll just truncate each dataset to only the first 22 columns. Because the variable names are structured in a bit of a confusing way, we’ll also just drop the first two rows in each file, and manually set the column names for all 22 variables. Once we’ve done that, we can simply concatenate all of the 17 datasets along the row axis to create one big dataset.

DL_PATH = 'data/facial_feedback/*csv'

dfs = []

columns = ['subject', 'cond_id', 'condition', 'correct_c1', 'correct_c2', 'correct_c3', 'correct_c4',

'correct_total', 'rating_t1', 'rating_t2', 'rating_c1', 'rating_c2', 'rating_c3',

'rating_c4', 'self_perf', 'comprehension', 'awareness', 'transcript', 'age', 'gender',

'student', 'occupation']

count = 0

for idx, study in enumerate(glob(DL_PATH)):

data = pd.read_csv(study, encoding='latin1', skiprows=2, header=None, index_col=False).iloc[:, :22]

data.columns = columns

# Add study name

data['study'] = idx

# Some sites used the same subject id numbering schemes, so prepend with study to create unique ids.

# Note that if we don't do this, Bambi would have no way of distinguishing two subjects who share

# the same id, which would hose our results.

data['uid'] = data['subject'].astype(float) + count

dfs.append(data)

data = pd.concat(dfs, axis=0).apply(pd.to_numeric, errors='coerce', axis=1)Let’s see what the first few rows look like…

data.head()| subject | cond_id | condition | correct_c1 | correct_c2 | correct_c3 | correct_c4 | correct_total | rating_t1 | rating_t2 | ... | self_perf | comprehension | awareness | transcript | age | gender | student | occupation | study | uid | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1.0 | 1.0 | 0.0 | 1.0 | 1.0 | 1.0 | 0.0 | 3.0 | 3.0 | 5.0 | ... | 5.0 | 1.0 | 0.0 | NaN | 21.0 | 0.0 | 1.0 | NaN | 0.0 | 1.0 |

| 1 | 2.0 | 2.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 9.0 | 8.0 | ... | 5.0 | 1.0 | 0.0 | NaN | 21.0 | 0.0 | 1.0 | NaN | 0.0 | 2.0 |

| 2 | 3.0 | 3.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 4.0 | 6.0 | 9.0 | ... | 7.0 | 1.0 | 0.0 | NaN | 21.0 | 1.0 | 1.0 | NaN | 0.0 | 3.0 |

| 3 | 4.0 | 4.0 | 1.0 | 1.0 | 1.0 | 1.0 | 1.0 | 4.0 | 9.0 | 9.0 | ... | 3.0 | 1.0 | 0.0 | NaN | 21.0 | 0.0 | 1.0 | NaN | 0.0 | 4.0 |

| 4 | 5.0 | 5.0 | 0.0 | 1.0 | 1.0 | 1.0 | 1.0 | 4.0 | 5.0 | 8.0 | ... | 4.0 | 0.0 | 0.0 | NaN | 18.0 | 0.0 | 1.0 | NaN | 0.0 | 5.0 |

5 rows × 24 columns

Reshaping the data

At this point we have our data in a pandas DataFrame with shape of (2612, 24). Unfortunately, we can’t use the data in this form. We’ll need to (a) conduct some basic quality control, and (b) “melt” the dataset–currently in so-called “wide” format, with each subject in a separate row–into long format, where each row is a single trial. Fortunately, we can do this easily in pandas:

# Keep only subjects who (i) respond appropriately on all trials,

# (ii) understand the cartoons, and (iii) don't report any awareness

# of the hypothesis or underlying theory.

valid = data.query('correct_total==4 and comprehension==1 and awareness==0')

long = pd.melt(valid, ['uid', 'condition', 'gender', 'age', 'study', 'self_perf'],

['rating_c1', 'rating_c2', 'rating_c3', 'rating_c4'], var_name='stimulus')long| uid | condition | gender | age | study | self_perf | stimulus | value | |

|---|---|---|---|---|---|---|---|---|

| 0 | 3.0 | 0.0 | 1.0 | 21.0 | 0.0 | 7.0 | rating_c1 | 2.0 |

| 1 | 4.0 | 1.0 | 0.0 | 21.0 | 0.0 | 3.0 | rating_c1 | 9.0 |

| 2 | 6.0 | 1.0 | 0.0 | 19.0 | 0.0 | 4.0 | rating_c1 | 0.0 |

| 3 | 8.0 | 1.0 | 0.0 | 21.0 | 0.0 | 9.0 | rating_c1 | 4.0 |

| 4 | 9.0 | 0.0 | 1.0 | 21.0 | 0.0 | 9.0 | rating_c1 | 0.0 |

| ... | ... | ... | ... | ... | ... | ... | ... | ... |

| 6935 | 181.0 | 1.0 | 0.0 | 19.0 | 16.0 | 6.0 | rating_c4 | 6.0 |

| 6936 | 182.0 | 0.0 | 0.0 | 19.0 | 16.0 | 1.0 | rating_c4 | 4.0 |

| 6937 | 183.0 | 1.0 | 0.0 | 18.0 | 16.0 | 9.0 | rating_c4 | 7.0 |

| 6938 | 184.0 | 0.0 | 0.0 | 19.0 | 16.0 | 8.0 | rating_c4 | 3.0 |

| 6939 | 187.0 | 1.0 | 0.0 | 19.0 | 16.0 | 5.0 | rating_c4 | 2.0 |

6940 rows × 8 columns

Notice that in the melt() call above, we’re treating not only the unique subject ID (uid) as an identifying variable, but also gender, experimental condition, age, and study name. Since these are all between-subject variables, these columns are all completely redundant with uid, and adding them does nothing to change the structure of our data. The point of explicitly listing them is just to keep them around in the dataset, so that we can easily add them to our models.

Fitting the model

Now that we’re all done with our (minimal) preprocessing, it’s time to fit the model! This turns out to be a snap in Bambi. We’ll begin with a very naive (and, as we’ll see later, incorrect) model that includes only the following terms:

- An overall (common) intercept.

- The common effect of experimental condition (“smiling” by holding a pen in one’s teeth vs. “pouting” by holding a pen in one’s lips). This is the primary variable of interest in the study.

- A group specific intercept for each of the 1,728 subjects in the ‘long’ dataset. (There were 2,576 subjects in the original dataset, but about 25% were excluded for various reasons, and we’re further excluding all subjects who lack complete data. As an exercise, you can try relaxing some of these criteria and re-fitting the models, though you’ll probably find that it makes no meaningful difference to the results.)

We’ll create a Bambi model, fit it, and store the results in a new object–which we can then interrogate in various ways.

# Initialize the model, passing in the dataset we want to use.

model = bmb.Model("value ~ condition + (1|uid)", long, dropna=True)

# Set a custom prior on group specific factor variances—just for illustration

group_specific_sd = bmb.Prior("HalfNormal", sigma=10)

group_specific_prior = bmb.Prior("Normal", mu=0, sigma=group_specific_sd)

model.set_priors(group_specific=group_specific_prior)

# Fit the model, drawing 1,000 MCMC draws per chain

results = model.fit(draws=1000)Automatically removing 9/6940 rows from the dataset.

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, condition, 1|uid_sigma, 1|uid_offset]Sampling 4 chains for 1_000 tune and 1_000 draw iterations (4_000 + 4_000 draws total) took 9 seconds.Notice that, in Bambi, the common and group specific effects are specified in the same formula. This is the same convention used by other similar packages like brms.

Inspecting results

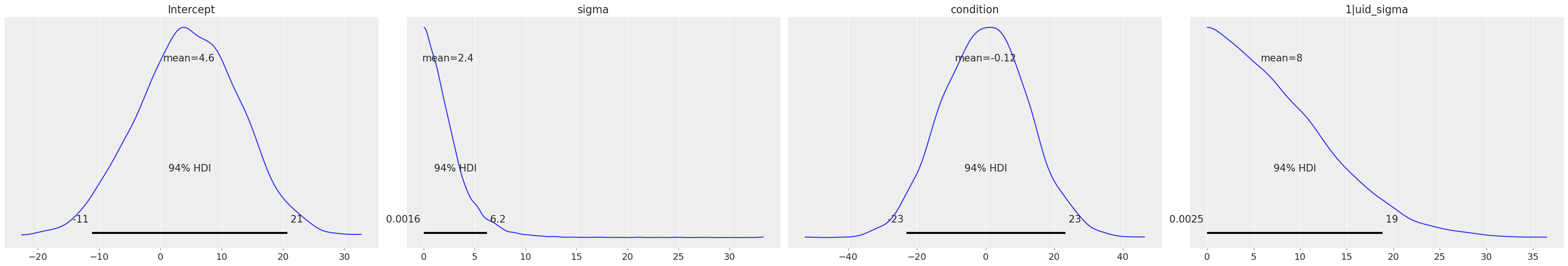

We can plot the prior distributions for all parameters with a call to the plot_priors() method.

model.plot_priors();Sampling: [1|uid_sigma, Intercept, condition, sigma]

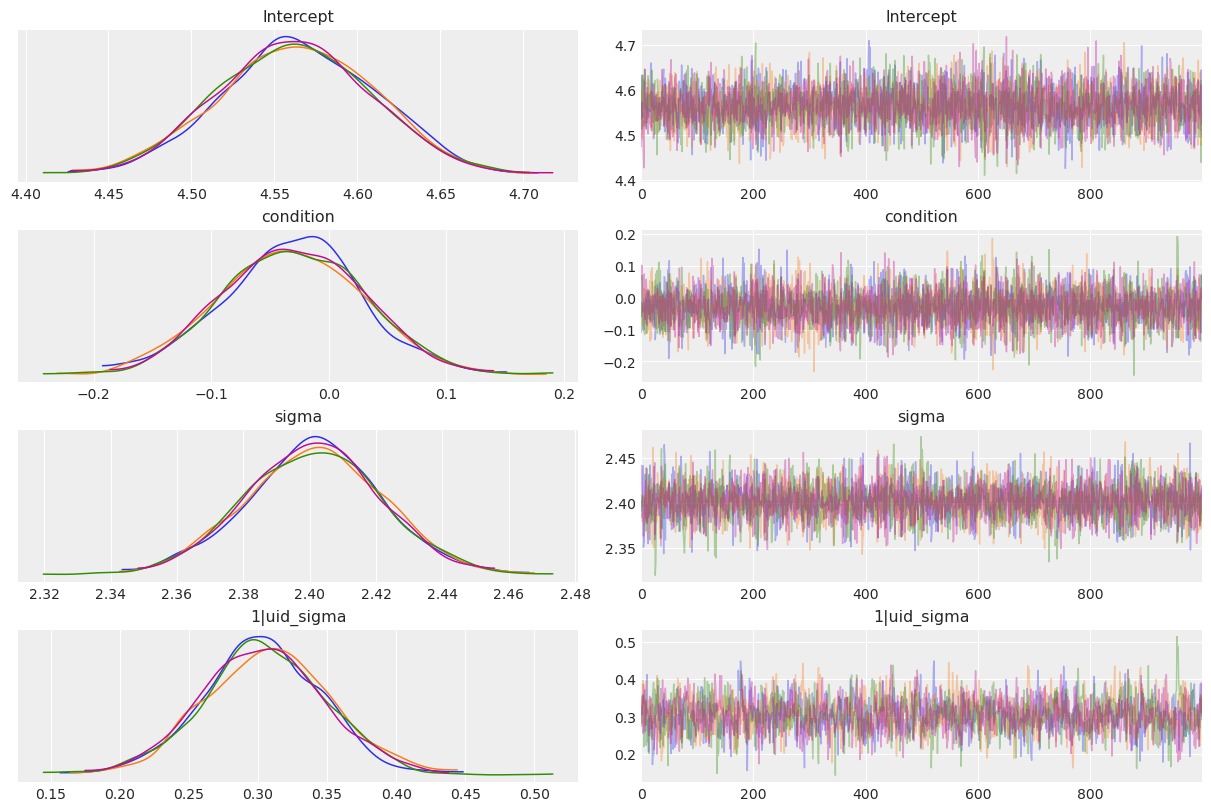

And we can easily get the posterior distributions with az.plot_trace(). We can select a subset of the parameters with the var_names arguments, like in the following cell. Or alternative by negating variables like var_names="~1|uid".

az.plot_trace(

results,

var_names=["Intercept", "condition", "sigma", "1|uid_sigma"],

compact=False,

);

If we want a numerical summary of the results, we just pass the results object to az.summary(). By default, summary shows the mean, standard deviation, and 94% highest density interval for the posterior. Summary also includes the Monte Carlo standard error, the effective sample size and the R-hat statistic.

az.summary(results, var_names=['Intercept', 'condition', 'sigma', '1|uid_sigma'])| mean | sd | hdi_3% | hdi_97% | mcse_mean | mcse_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|

| Intercept | 4.563 | 0.047 | 4.478 | 4.650 | 0.001 | 0.001 | 5176.0 | 3494.0 | 1.0 |

| condition | -0.030 | 0.060 | -0.147 | 0.076 | 0.001 | 0.001 | 5942.0 | 2962.0 | 1.0 |

| sigma | 2.401 | 0.020 | 2.362 | 2.439 | 0.000 | 0.000 | 5439.0 | 2764.0 | 1.0 |

| 1|uid_sigma | 0.305 | 0.045 | 0.217 | 0.388 | 0.001 | 0.001 | 1518.0 | 2202.0 | 1.0 |

Expanding the model

Looking at the parameter estimates produced by our model, it seems pretty clear that there’s no meaningful effect of condition. The posterior distribution is centered almost exactly on 0, with most of the probability mass on very small values. The 94% HDI spans from \(\approx -0.14\) to \(\approx 0.08\)–in other words, the plausible effect of the experimental manipulation is, at best, to produce a change of < 0.2 on cartoon ratings made on a 10-point scale. For perspective, the variation between subjects is enormous in comparison–the standard deviation for group specific effects 1|uid_sigma is around 0.3. We can also see that the model is behaving well, and the sampler seems to have converged nicely (the traces for all parameters look stationary).

Unfortunately, our first model has at least two pretty serious problems. First, it gives no consideration to between-study variation–we’re simply lumping all 1,728 subjects together, as if they came from the same study. A better model would properly account for study-level variation. We could model study as either a common or a group specific factor in this case–both choices are defensible, depending on whether we want to think of the 17 studies in this dataset as the only sites of interest, or as if they’re just 17 random sites drawn from some much larger population that have particular characteristics we want to account for.

For present purposes, we’ll adopt the latter strategy (as an exercise, you can modify the the code below and re-run the model with study as a common factor). We’ll “keep it maximal” by adding both group specific study intercepts and group specific study slopes to the model. That is, we’ll assume that the subjects at each research site have a different baseline appreciation of the cartoons (some find the cartoons funnier than others), and that the effect of condition also varies across sites.

Second, our model also fails to explicitly model variation in cartoon ratings that should properly be attributed to the 4 stimuli. In principle, our estimate of the common effect of condition could change somewhat once we correctly account for stimulus variability (though in practice, the net effect is almost always to reduce effects, not increase them–so in this case, it’s very unlikely that adding group specific stimulus effects will produce a meaningful effect of condition). So we’ll deal with this by adding specific intercepts for the 4 stimuli. We’ll model the stimuli as group specific effect, rather than common, because it wouldn’t make sense to think of these particular cartoons as exhausting the universe of stimuli we care about (i.e., we wouldn’t really care about the facial-feedback effect if we knew that it only applied to 4 specific Far Side cartoons, and no other stimuli).

Lastly, just for fun, we can throw in some additional covariates, since they’re readily available in the dataset, and may be of interest even if they don’t directly inform the core hypothesis. Specifically, we’ll add common effects of gender and age to the model, which will let us estimate the degree to which participants’ ratings of the cartoons varies as a function of these background variables.

Once we’ve done all that, we end up with a model that’s in a good position to answer the question we care about–namely, whether the smiling/pouting manipulation has an effect on cartoon ratings that generalizes across the subjects, studies, and stimuli found in the RRR dataset.

model = bmb.Model(

"value ~ condition + age + gender + (1|uid) + (condition|study) + (condition|stimulus)",

long,

dropna=True,

)

group_specific_sd = bmb.Prior("HalfNormal", sigma=4)

group_specific_prior = bmb.Prior("Normal", mu=0, sigma=group_specific_sd)

model.set_priors(group_specific=group_specific_prior)

# Not we use 2000 samples for tuning and increase the taget_accept to 0.99.

# The default values result in divergences.

results = model.fit(draws=1000, tune=2000, target_accept=0.99)Automatically removing 33/6940 rows from the dataset.

Initializing NUTS using jitter+adapt_diag...

Multiprocess sampling (4 chains in 4 jobs)

NUTS: [sigma, Intercept, condition, age, gender, 1|uid_sigma, 1|uid_offset, 1|study_sigma, 1|study_offset, condition|study_sigma, condition|study_offset, 1|stimulus_sigma, 1|stimulus_offset, condition|stimulus_sigma, condition|stimulus_offset]Sampling 4 chains for 2_000 tune and 1_000 draw iterations (8_000 + 4_000 draws total) took 445 seconds.

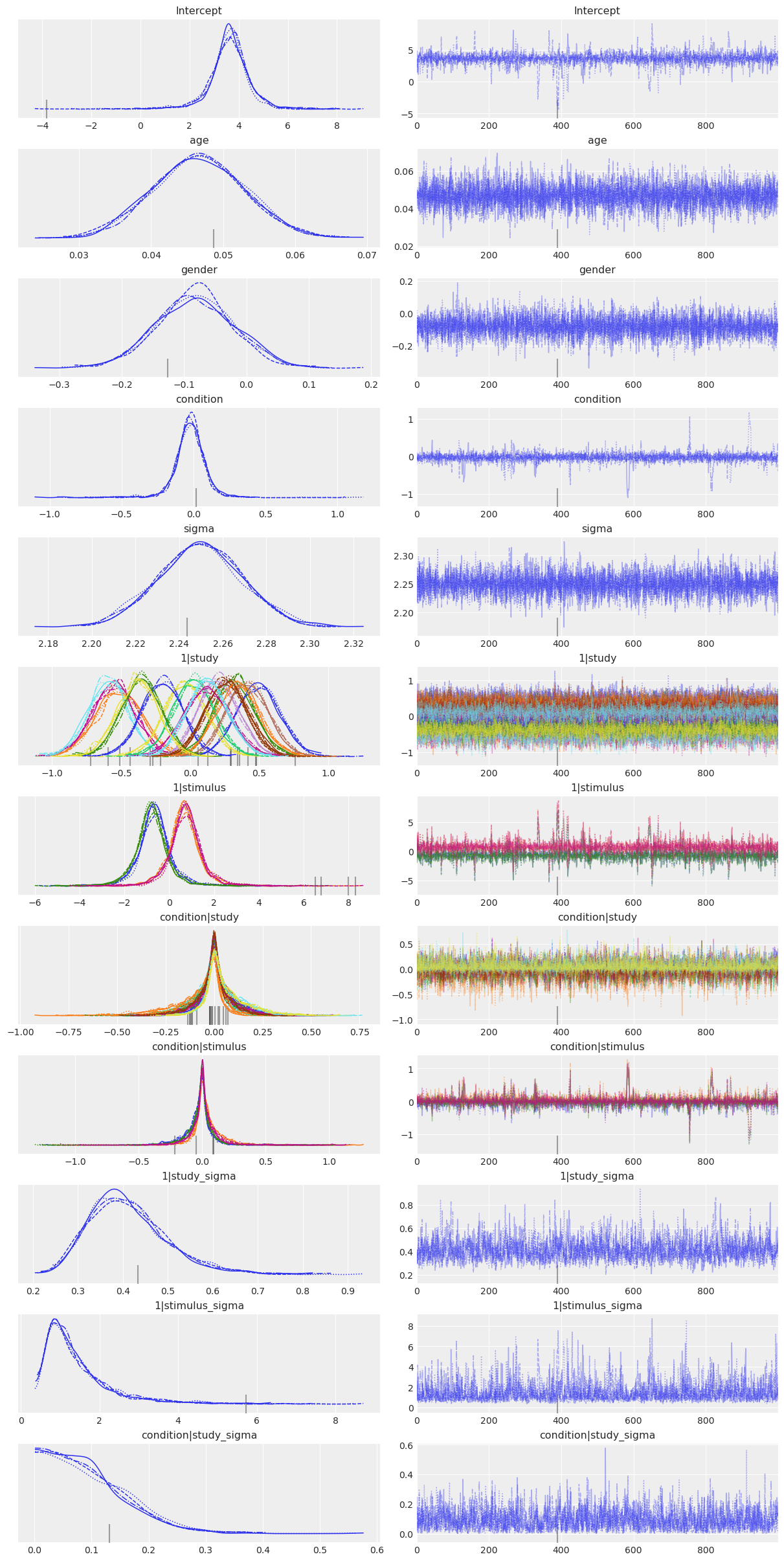

There was 1 divergence after tuning. Increase `target_accept` or reparameterize.az.plot_trace(

results,

var_names=[

'Intercept', 'age', 'gender', 'condition', 'sigma',

'1|study', '1|stimulus', 'condition|study', 'condition|stimulus',

'1|study_sigma', '1|stimulus_sigma', 'condition|study_sigma',

],

compact=True

);

And the answer is…

No. There’s still no discernible effect. Modeling the data using a mixed-effects model does highlight a number of other interesting features, however:

- The stimulus-level standard deviation

1|stimulus_sigmais quite large compared to the other factors. This is potentially problematic, because it suggests that a more conventional analysis that left individual stimulus effects out of the model could potentially run a high false positive rate. Note that this is a problem that affects both the RRR and the original Strack study equally; the moral of the story is to deliberately sample large numbers of stimuli and explicitly model their influence. - Older people seem to rate cartoons as being (a little bit) funnier.

- The variation across sites is surprisingly small–in terms of both the group specific intercepts (

1|study) and the group specific slopes (condition|study). In other words, the constitution of the sample, the gender of the experimenter, or any of the hundreds of others of between-site differences that one might conceivably have expected to matter, don’t really seem to make much of a difference to participants’ ratings of the cartoons.

%load_ext watermark

%watermark -n -u -v -iv -wLast updated: Sun Sep 28 2025

Python implementation: CPython

Python version : 3.13.7

IPython version : 9.4.0

bambi : 0.14.1.dev58+gb25742785.d20250928

arviz : 0.22.0

pandas: 2.3.2

Watermark: 2.5.0